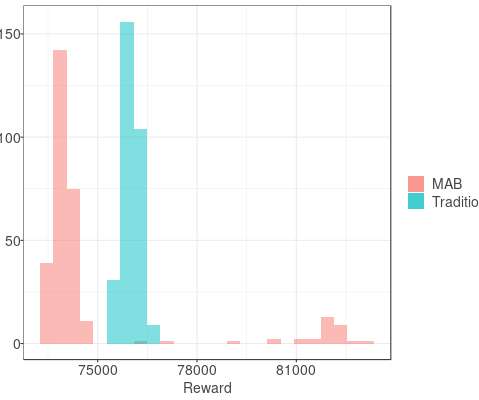

Changing assignment weights with time-based confounders

The Unofficial Google Data Science Blog

JULY 22, 2020

Instead, we focus on the case where an experimenter has decided to run a full-traffic ramp-up experiment and wants to use the data from all of the epochs in the analysis. When assignment weights change over time and there are time-based confounders, this complication must be accounted for in either the analysis or the experimental design.
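To make the complication concrete, here is a minimal, noise-free sketch in Python. It assumes two hypothetical epochs. In the first, 10% of traffic goes to treatment and the baseline outcome is 1.0. In the second, the treatment weight rises to 50% and the baseline drifts up to 2.0; that drift is the time-based confounder. The true additive effect is 0.1. The names `Epoch`, `simulate`, `pooled_estimate`, and `stratified_estimate` are illustrative. Stratifying by epoch is one common analysis-side remedy, not necessarily the approach this post recommends.

```python
from dataclasses import dataclass


@dataclass
class Epoch:
    n_units: int         # total traffic in this epoch (hypothetical)
    treat_weight: float  # fraction of traffic assigned to treatment
    baseline: float      # mean untreated outcome; drifts across epochs


def simulate(epochs, effect):
    """Build (epoch_index, treated, outcome) rows with no noise."""
    rows = []
    for i, ep in enumerate(epochs):
        n_treat = round(ep.n_units * ep.treat_weight)
        for j in range(ep.n_units):
            treated = j < n_treat
            rows.append((i, treated, ep.baseline + (effect if treated else 0.0)))
    return rows


def mean(xs):
    return sum(xs) / len(xs)


def pooled_estimate(rows):
    """Naive difference in means that ignores which epoch a unit came from."""
    treated = [y for _, d, y in rows if d]
    control = [y for _, d, y in rows if not d]
    return mean(treated) - mean(control)


def stratified_estimate(rows):
    """Compare arms within each epoch, then average weighted by epoch traffic."""
    total = len(rows)
    estimate = 0.0
    for e in sorted({e for e, _, _ in rows}):
        sub = [(d, y) for ee, d, y in rows if ee == e]
        treated = [y for d, y in sub if d]
        control = [y for d, y in sub if not d]
        estimate += len(sub) / total * (mean(treated) - mean(control))
    return estimate


epochs = [Epoch(1000, 0.10, 1.0), Epoch(1000, 0.50, 2.0)]
rows = simulate(epochs, effect=0.1)
print(round(pooled_estimate(rows), 3))      # 0.576
print(round(stratified_estimate(rows), 3))  # 0.1
```

Pooling all epochs inflates the estimate to about 0.576. The treatment arm is over-represented in the later, higher-baseline epoch, so the baseline drift is mistaken for a treatment effect. Comparing arms within each epoch and then averaging by epoch traffic recovers the true 0.1.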
