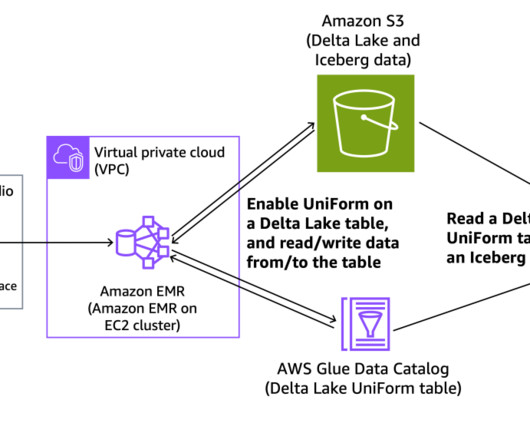

Expand data access through Apache Iceberg using Delta Lake UniForm on AWS

AWS Big Data

NOVEMBER 14, 2024

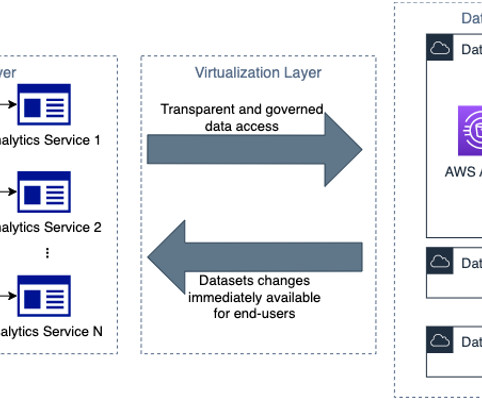

The landscape of big data management has been transformed by the rising popularity of open table formats such as Apache Iceberg, Apache Hudi, and Linux Foundation Delta Lake. These formats, designed to address the limitations of traditional data storage systems, have become essential in modern data architectures.

Let's personalize your content