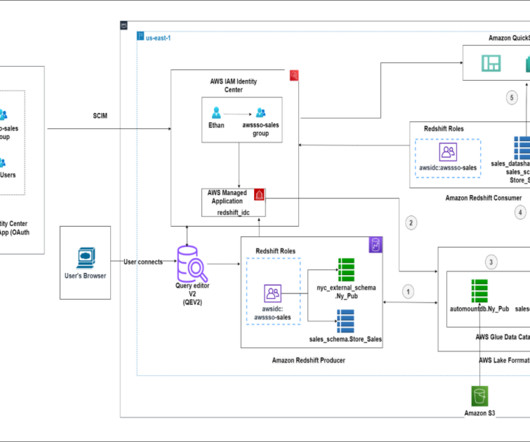

Seamless integration of data lake and data warehouse using Amazon Redshift Spectrum and Amazon DataZone

AWS Big Data

AUGUST 15, 2024

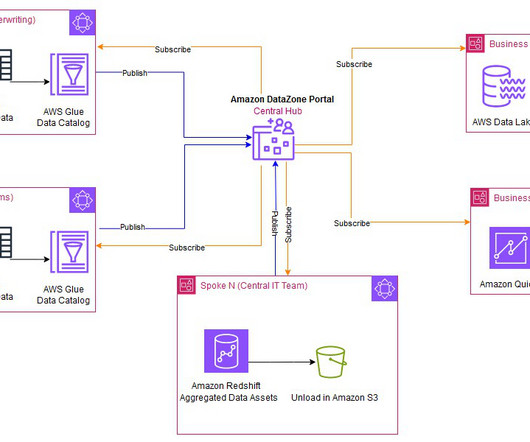

Unlocking the true value of data often gets impeded by siloed information. Traditional data management—wherein each business unit ingests raw data in separate data lakes or warehouses—hinders visibility and cross-functional analysis. Business units access clean, standardized data.

Let's personalize your content