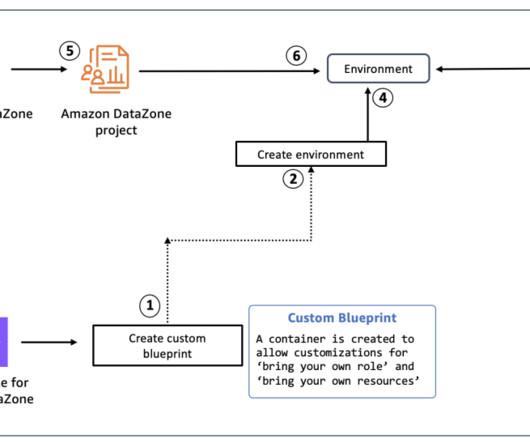

Seamless integration of data lake and data warehouse using Amazon Redshift Spectrum and Amazon DataZone

AWS Big Data

AUGUST 15, 2024

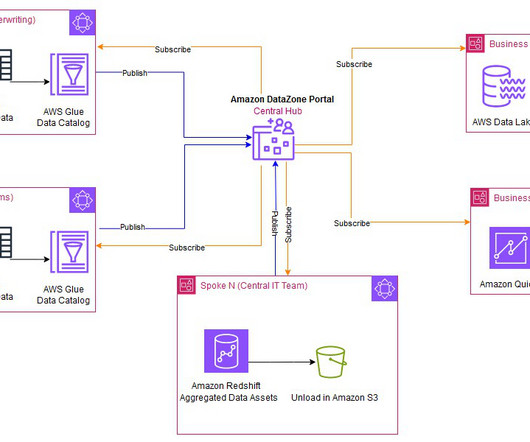

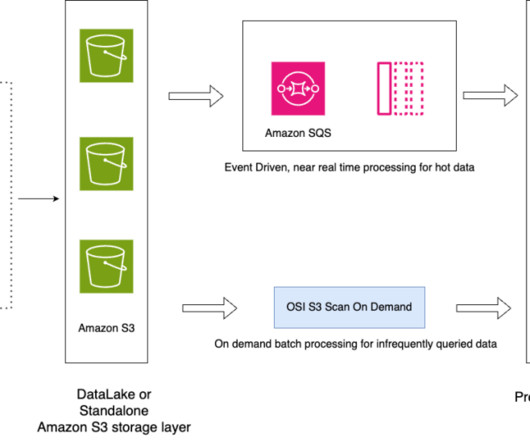

Unifying these necessitates additional data processing, requiring each business unit to provision and maintain a separate data warehouse. This burdens business units focused solely on consuming the curated data for analysis and not concerned with data management tasks, cleansing, or comprehensive data processing.

Let's personalize your content