Towards optimal experimentation in online systems

The Unofficial Google Data Science Blog

APRIL 23, 2024

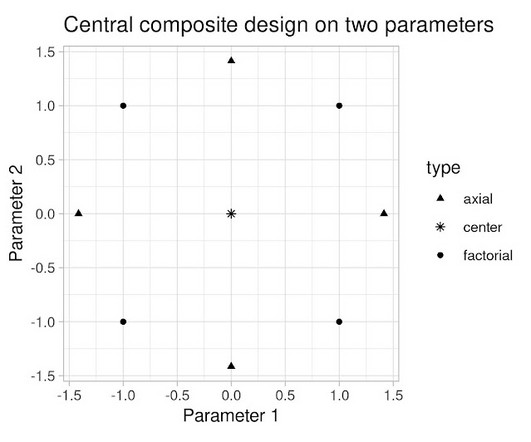

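Experiments, Parameters and Models

At YouTube, the relationships between system parameters and metrics often seem simple: straight-line models sometimes fit our data well. That has a direct consequence for how experiments should be designed. When a straight line is fit by least squares with independent, equal-variance noise, the variance of the estimated slope is inversely proportional to the sum of squared deviations of the tested parameter values from their mean. Placing measurements far apart therefore pins down the slope more precisely than clustering them. This holds generally, not just in these experiments: spreading measurements out is generally better, provided the straight-line model is correct a priori.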
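The claim that spread-out measurements are better can be checked numerically. The sketch below is a minimal illustration, assuming ordinary least squares with independent, equal-variance Gaussian noise. The three designs, the true slope and intercept, and the function names (`ols_slope`, `slope_variance`, `simulated_slope_variance`) are hypothetical and are not taken from YouTube's experiments. For four measurements of a parameter scaled to [0, 1], it compares the theoretical slope variance, `noise_var / sum((x - mean)^2)`, with a Monte Carlo estimate.

```python
import random
import statistics


def ols_slope(xs, ys):
    """Ordinary least squares slope of ys regressed on xs (with an intercept)."""
    x_bar = statistics.fmean(xs)
    y_bar = statistics.fmean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    return sxy / sxx


def slope_variance(xs, noise_var=1.0):
    """Theoretical OLS slope variance: noise_var / sum((x - mean)^2)."""
    x_bar = statistics.fmean(xs)
    return noise_var / sum((x - x_bar) ** 2 for x in xs)


def simulated_slope_variance(xs, true_slope=2.0, intercept=1.0, noise_sd=1.0,
                             reps=5000, seed=0):
    """Monte Carlo check: refit the slope on fresh noisy data many times.

    Assumes the hypothetical model y = intercept + true_slope * x + noise,
    with independent Gaussian noise of standard deviation noise_sd.
    """
    rng = random.Random(seed)
    slopes = []
    for _ in range(reps):
        ys = [intercept + true_slope * x + rng.gauss(0.0, noise_sd) for x in xs]
        slopes.append(ols_slope(xs, ys))
    return statistics.variance(slopes)


# Hypothetical designs: where to place four measurements on [0, 1].
designs = {
    "clustered": [0.4, 0.45, 0.55, 0.6],
    "evenly spaced": [0.0, 1 / 3, 2 / 3, 1.0],
    "extremes": [0.0, 0.0, 1.0, 1.0],
}

for name, xs in designs.items():
    print(f"{name:>14}: theory {slope_variance(xs):7.3f}, "
          f"simulated {simulated_slope_variance(xs):7.3f}")
```

Putting all measurements at the two extremes gives the smallest slope variance here, but it leaves no interior points with which to detect curvature. That is why the advantage depends on the straight-line model being correct in the first place; keeping a few interior points trades some precision for the ability to check that assumption.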
