Rethinking ‘Big Data’ — and the rift between business and data ops

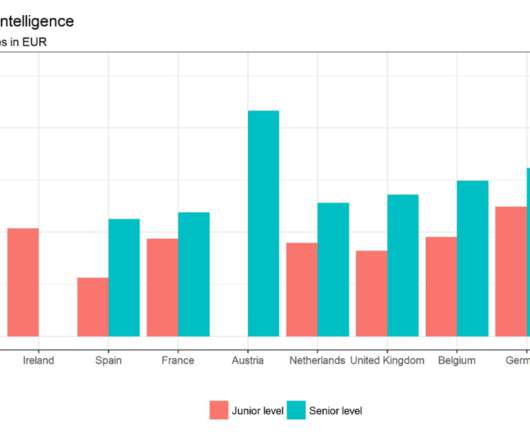

CIO Business Intelligence

MAY 7, 2024

Still, CIOs should not be too quick to consign the technologies and techniques touted during the honeymoon period (circa 2005-2015) of the Big Data Era to the dustbin of history. “Big Data” is a critical area that risks being miscategorized as either irrelevant (a thing of the past) or lacking an upside worth the trouble.