Using Text Mining on Reviews Data to Generate Business Insights!

Analytics Vidhya

OCTOBER 9, 2022

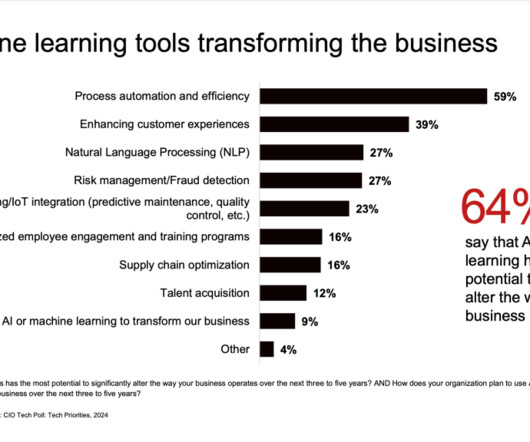

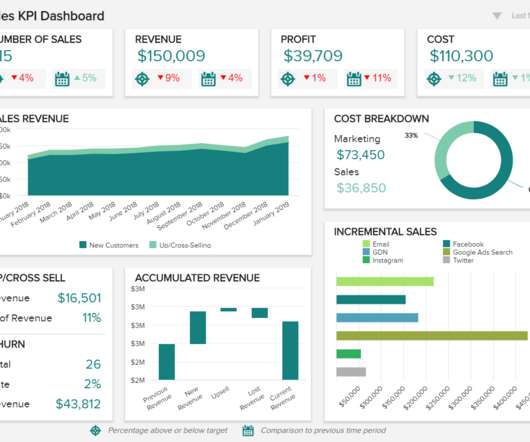

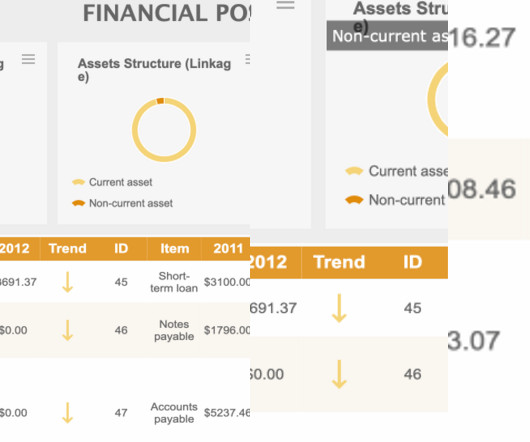

Introduction

Textual data from social media posts, customer feedback, and reviews are valuable resources for any business. Such unstructured data holds a host of useful information waiting to be discovered. Making sense of it can help companies better understand […].
