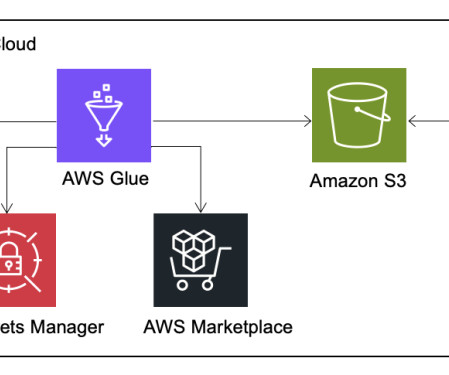

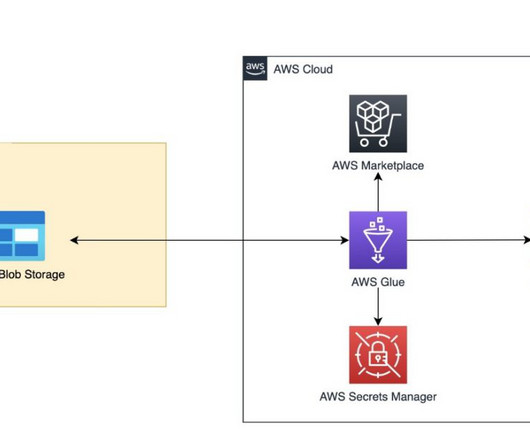

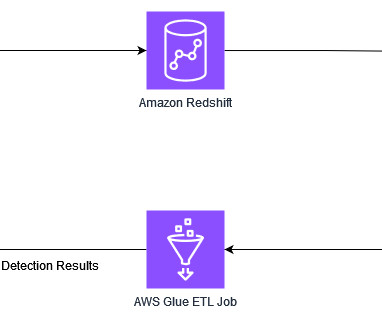

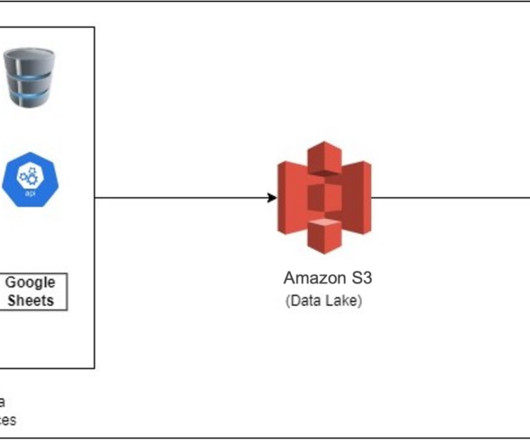

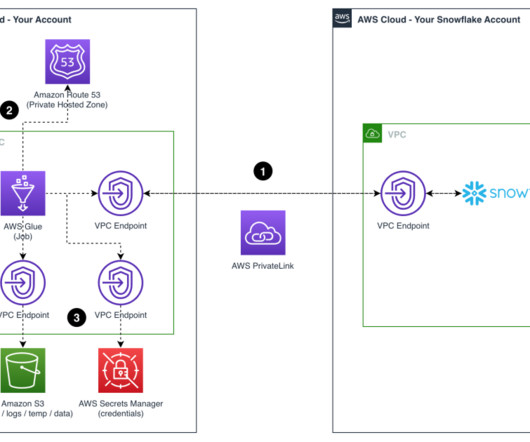

Migrate Delta tables from Azure Data Lake Storage to Amazon S3 using AWS Glue

AWS Big Data

SEPTEMBER 10, 2024

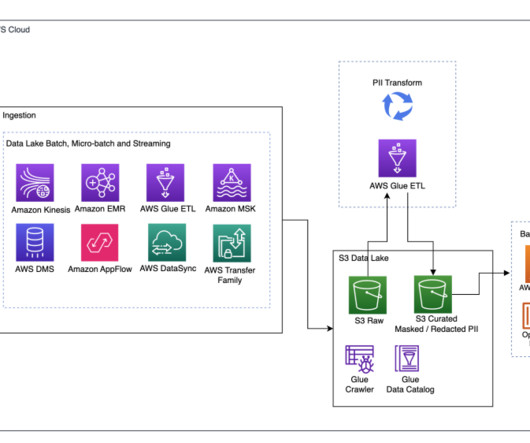

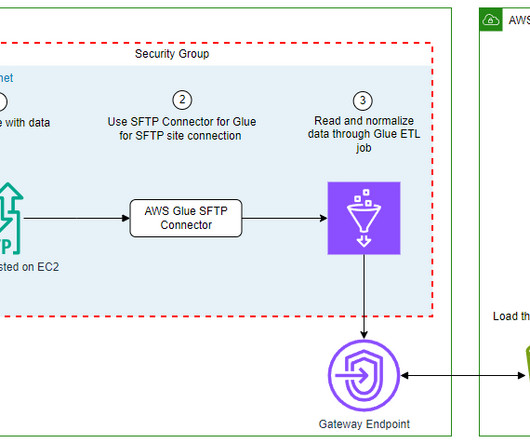

We often hear from customers who started their data journey by building data lakes on Microsoft Azure and now want to extend access to that data to AWS services. In such scenarios, data engineers face challenges in connecting to and extracting data from storage containers on Microsoft Azure.
