AWS Glue for Handling Metadata

Analytics Vidhya

AUGUST 19, 2022

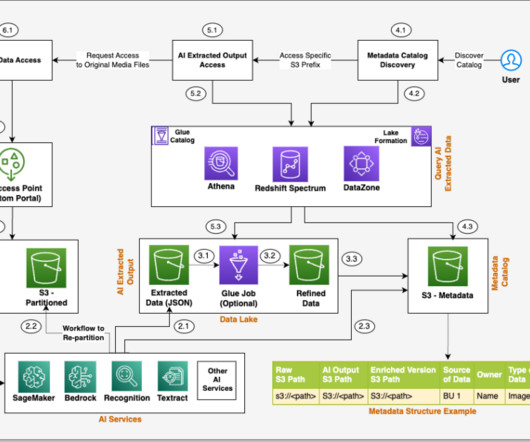

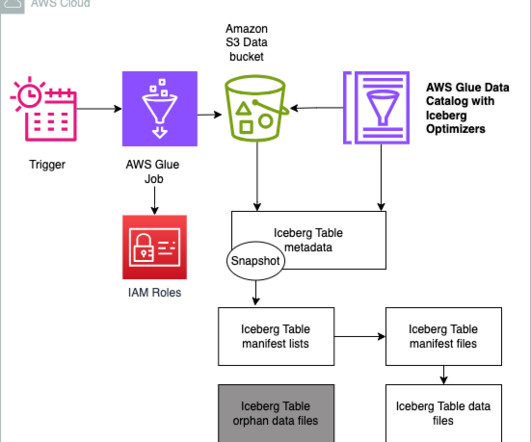

Introduction

AWS Glue helps data engineers prepare data for other data consumers through the Extract, Transform, and Load (ETL) process. As a managed service, it offers a simple, cost-effective way to categorize and manage big data across an enterprise. It provides organizations with […].

Let's personalize your content