2021 Data/AI Salary Survey

O'Reilly on Data

SEPTEMBER 15, 2021

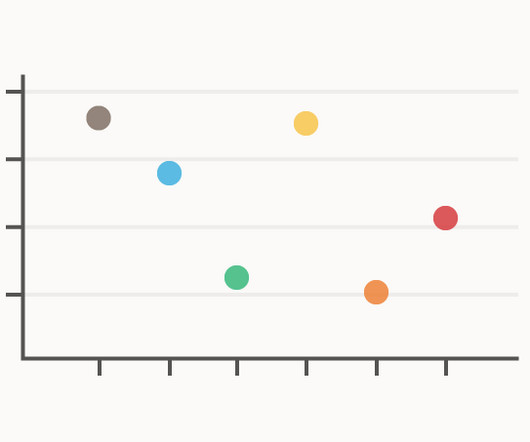

64% of the respondents took part in training or obtained certifications in the past year, and 31% reported spending over 100 hours in training programs, ranging from formal graduate degrees to reading blog posts. The tools category includes tools for building and maintaining data pipelines, like Kafka. Salaries by Programming Language.

Let's personalize your content