An AI Chat Bot Wrote This Blog Post …

DataKitchen

DECEMBER 9, 2022

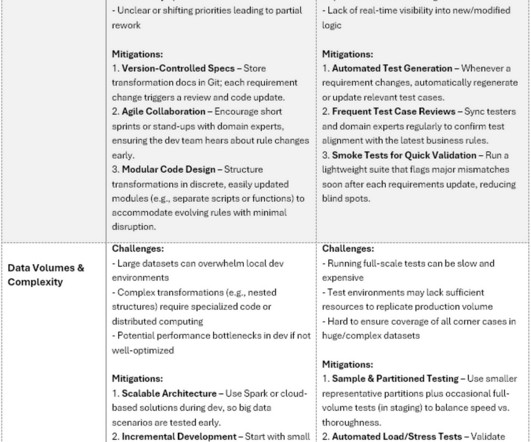

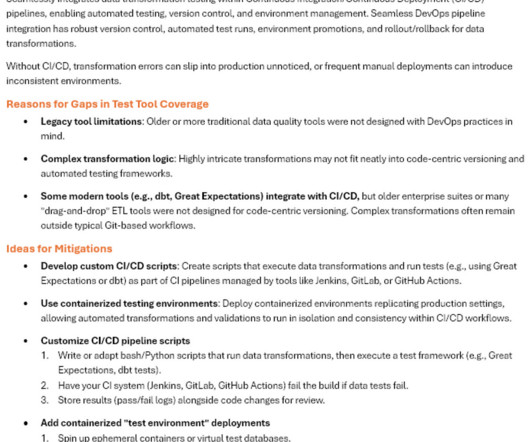

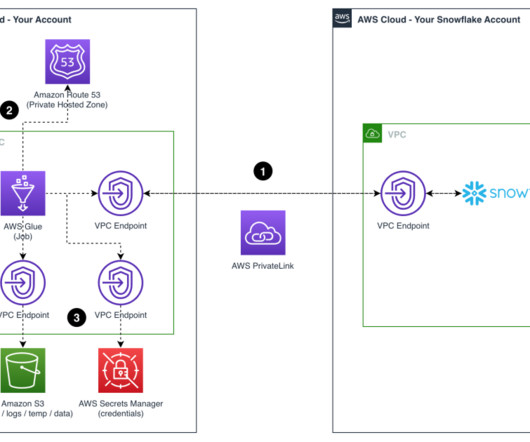

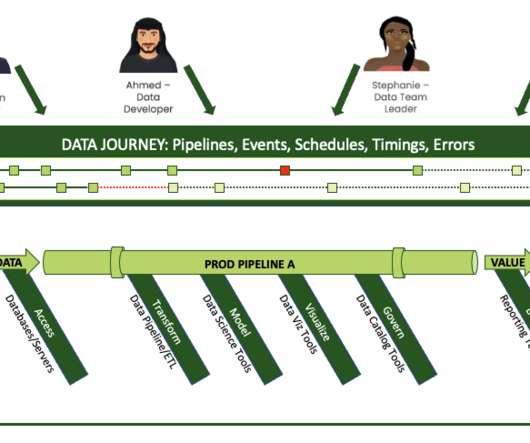

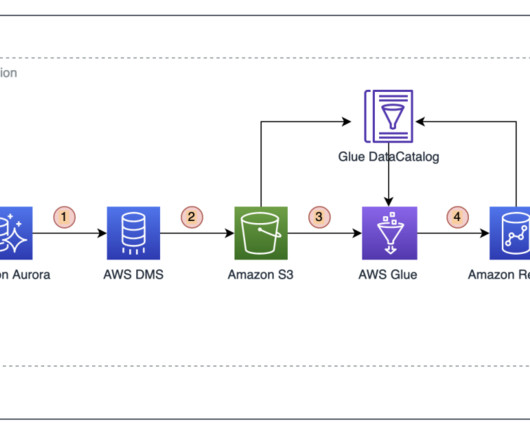

DataOps automation typically involves using tools and technologies to automate the steps of the data analytics and machine learning process, from data preparation and cleaning to model training and deployment. The data scientists and IT professionals were amazed; they couldn’t believe their eyes.
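To make the idea of automating those steps concrete, here is a minimal sketch in Python. It is not taken from any particular DataOps tool: the function names (`prepare`, `clean`, `train`, `deploy`, `run_pipeline`) and the toy "model" (a simple mean) are assumptions chosen purely for illustration.

```python
from statistics import mean


def prepare(raw):
    """Parse raw records into floats; values that cannot be parsed become None."""
    parsed = []
    for record in raw:
        try:
            parsed.append(float(record))
        except (TypeError, ValueError):
            parsed.append(None)
    return parsed


def clean(values):
    """Drop the records that failed to parse."""
    return [v for v in values if v is not None]


def train(values):
    """'Train' a trivial baseline model: the mean of the cleaned values."""
    return {"mean": mean(values)}


def deploy(model):
    """Stand-in for deployment: return a callable that serves predictions."""
    return lambda: model["mean"]


def run_pipeline(raw, steps=(prepare, clean, train, deploy)):
    """Run every step in order, feeding each step's output into the next."""
    result = raw
    for step in steps:
        result = step(result)
    return result


predict = run_pipeline(["1.0", "2.0", "oops", "3.0"])
print(predict())  # 2.0
```

The point of the sketch is only that the steps are chained by code rather than by hand, so the whole sequence can be rerun whenever new data arrives. A real pipeline would typically add testing, logging, and scheduling around each step.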
