Enriching metadata for accurate text-to-SQL generation for Amazon Athena

AWS Big Data

OCTOBER 14, 2024

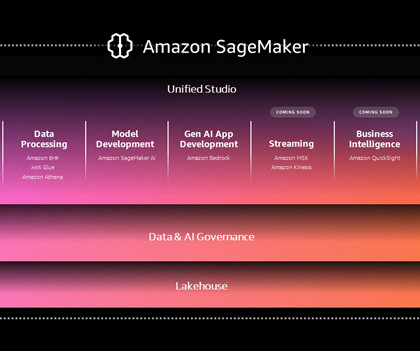

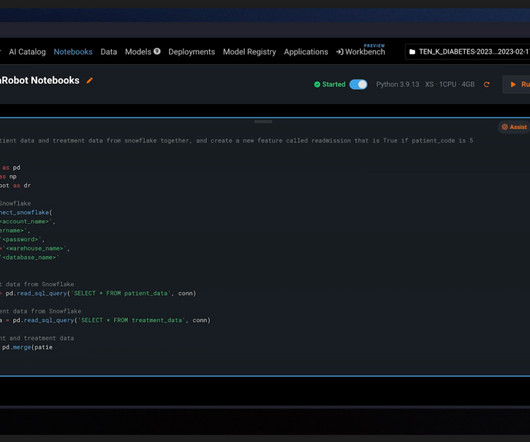

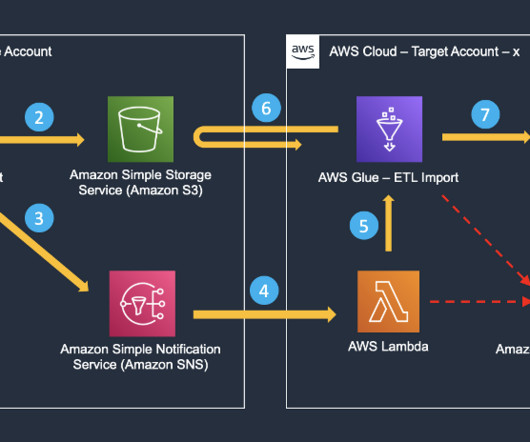

Enterprise data is brought into data lakes and data warehouses to carry out analytical, reporting, and data science use cases using AWS analytical services like Amazon Athena , Amazon Redshift , Amazon EMR , and so on. Table metadata is fetched from AWS Glue. The generated Athena SQL query is run.

Let's personalize your content