Accelerate your migration to Amazon OpenSearch Service with Reindexing-from-Snapshot

AWS Big Data

NOVEMBER 22, 2024

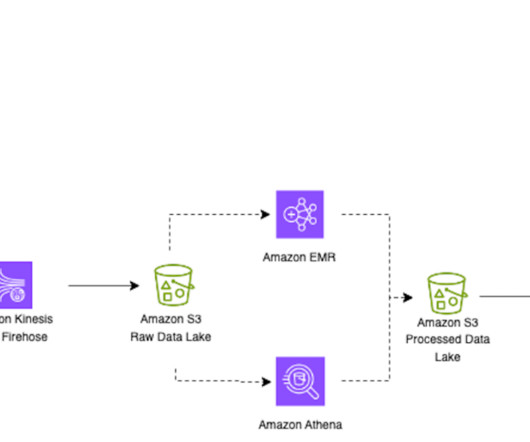

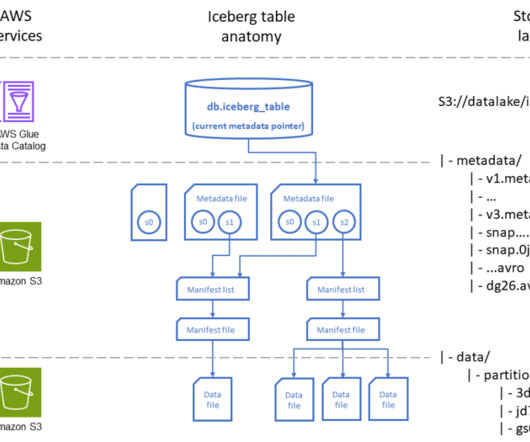

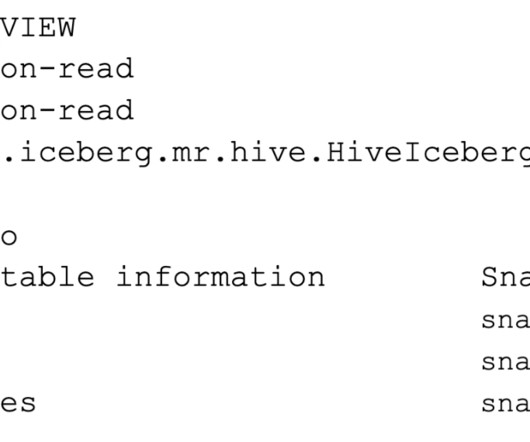

In this post, we introduce a new mechanism called Reindexing-from-Snapshot (RFS) and explain how it can address your migration concerns and simplify moving to OpenSearch. RFS parses documents from a snapshot of the source cluster and reindexes them into the target cluster, which minimizes the performance impact on the source cluster during migration.
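To make the data flow concrete, here is a minimal sketch of the reindexing half of that idea. It is not the RFS implementation: it assumes the documents have already been parsed out of a snapshot (represented here by a hypothetical in-memory list, `snapshot_docs`) and only shows how they could be grouped into request bodies in the `_bulk` API's newline-delimited JSON format. The function name `bulk_batches`, the index name `logs`, and the batch size are all illustrative assumptions.

```python
import json
from typing import Dict, Iterable, Iterator, List


def bulk_batches(docs: Iterable[Dict], index: str, batch_size: int = 2) -> Iterator[str]:
    """Group documents into NDJSON bodies in the _bulk API format."""
    lines: List[str] = []
    count = 0
    for doc in docs:
        # Each document becomes two lines: an action line, then the source.
        lines.append(json.dumps({"index": {"_index": index, "_id": doc["_id"]}}))
        lines.append(json.dumps(doc["_source"]))
        count += 1
        if count == batch_size:
            # A bulk body must end with a trailing newline.
            yield "\n".join(lines) + "\n"
            lines, count = [], 0
    if lines:
        # Emit the final, possibly smaller, batch.
        yield "\n".join(lines) + "\n"


# Hypothetical documents standing in for what would be parsed from a snapshot.
snapshot_docs = [
    {"_id": "1", "_source": {"title": "a"}},
    {"_id": "2", "_source": {"title": "b"}},
    {"_id": "3", "_source": {"title": "c"}},
]

batches = list(bulk_batches(snapshot_docs, index="logs"))
```

Each string in `batches` could then be sent to the target cluster's `_bulk` endpoint. Because the documents come from the snapshot and not from live queries, the source cluster serves no read traffic for this step.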
