Amazon Redshift announcements at AWS re:Invent 2023 to enable analytics on all your data

AWS Big Data

NOVEMBER 29, 2023

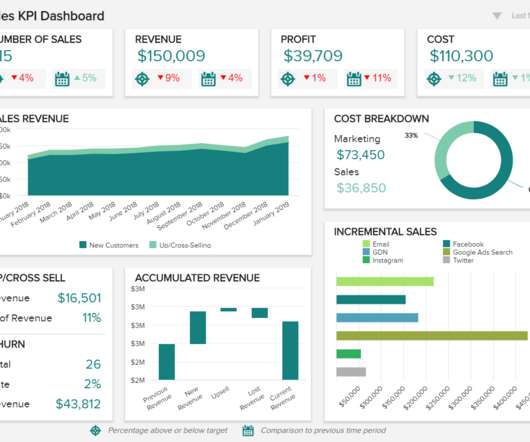

In 2013, Amazon Web Services revolutionized the data warehousing industry by launching Amazon Redshift , the first fully-managed, petabyte-scale, enterprise-grade cloud data warehouse. Amazon Redshift made it simple and cost-effective to efficiently analyze large volumes of data using existing business intelligence tools.

Let's personalize your content