What is data architecture? A framework to manage data

CIO Business Intelligence

DECEMBER 20, 2024

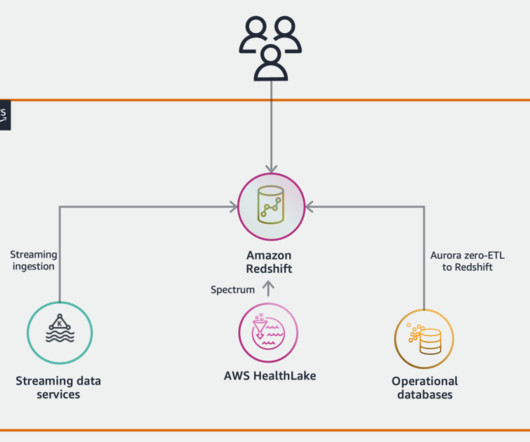

Data architecture goals

The goal of data architecture is to translate business needs into data and system requirements, and to manage data and its flow through the enterprise. Many organizations today are looking to modernize their data architecture as a foundation to fully leverage AI and enable digital transformation.