Accelerate your data workflows with Amazon Redshift Data API persistent sessions

AWS Big Data

NOVEMBER 22, 2024

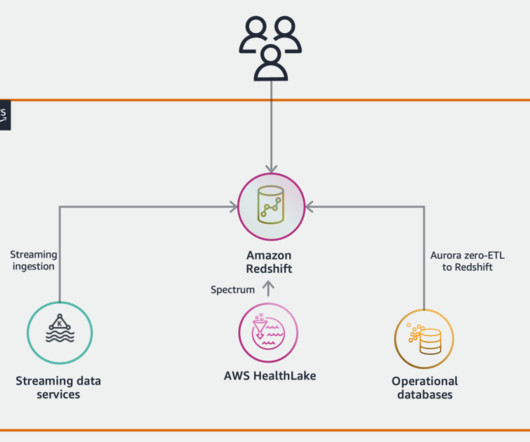

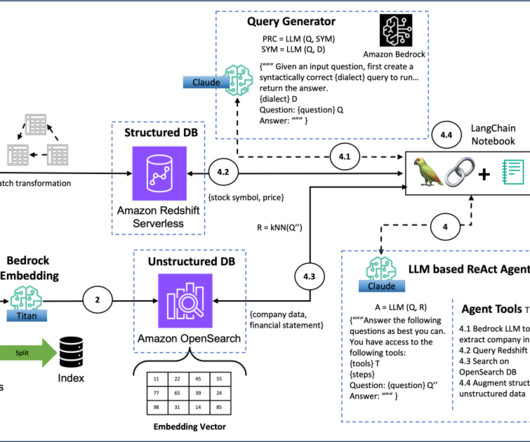

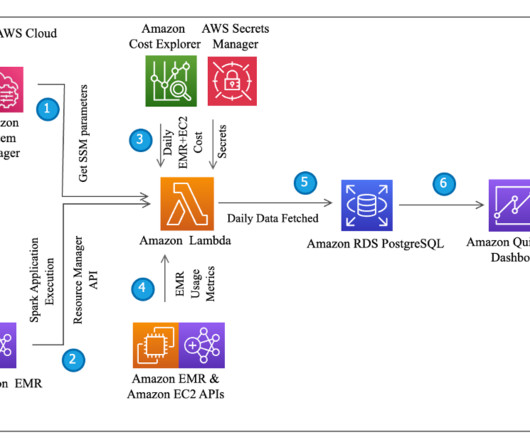

Amazon Redshift is a fast, scalable, secure, and fully managed cloud data warehouse that you can use to analyze your data at scale. With Data API session reuse, you can create a single long-lived session at the start of an ETL pipeline and reuse that persistent context across all ETL phases, so session-scoped state such as temporary tables and variables stays available from one phase to the next.
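As a rough illustration of session reuse, the sketch below runs a list of SQL phases through one Data API session. It assumes a boto3-style `redshift-data` client is passed in, and it assumes a Redshift Serverless workgroup (a provisioned cluster would pass `ClusterIdentifier` and its credentials instead). The helper names `run_in_session` and `run_pipeline`, the keep-alive value, and the polling interval are hypothetical choices for this example, not part of the original post.

```python
import time


def run_in_session(client, sql, session_id=None, *, workgroup=None,
                   database=None, keep_alive_seconds=600, poll_seconds=1.0):
    """Run one statement, opening or reusing a session; return the session ID."""
    if session_id is None:
        # First call: connection parameters plus SessionKeepAliveSeconds
        # ask the Data API to open a session that outlives this statement.
        resp = client.execute_statement(
            Sql=sql,
            WorkgroupName=workgroup,
            Database=database,
            SessionKeepAliveSeconds=keep_alive_seconds,
        )
    else:
        # Later calls: pass only the SessionId; the connection details
        # are taken from the existing session.
        resp = client.execute_statement(Sql=sql, SessionId=session_id)

    # Wait for the statement to finish before the next phase starts,
    # since each phase here depends on the previous one.
    while True:
        desc = client.describe_statement(Id=resp["Id"])
        status = desc["Status"]
        if status == "FINISHED":
            return resp.get("SessionId", session_id)
        if status in ("FAILED", "ABORTED"):
            raise RuntimeError(f"{status}: {desc.get('Error', 'no error detail')}")
        time.sleep(poll_seconds)


def run_pipeline(client, phases, workgroup, database):
    """Run each SQL phase in order inside a single shared session."""
    session_id = None
    for sql in phases:
        session_id = run_in_session(
            client, sql, session_id, workgroup=workgroup, database=database
        )
    return session_id
```

With real credentials, the client would typically come from `boto3.client("redshift-data")`, and a call such as `run_pipeline(client, ["CREATE TEMP TABLE stage (id int)", "INSERT INTO stage VALUES (1)"], workgroup="my-workgroup", database="dev")` would let the second phase see the temporary table created by the first. The workgroup and database names there are placeholders.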
