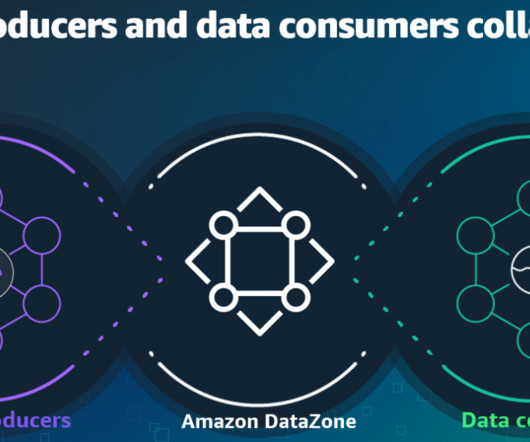

How EUROGATE established a data mesh architecture using Amazon DataZone

AWS Big Data

JANUARY 15, 2025

`… cycle_end"', "sagemakedatalakeenvironment_sub_db", ctas_approach=False)`

A similar approach is used to connect to shared data from Amazon Redshift, which is also shared using Amazon DataZone. The consumer subscribes to the data product in Amazon DataZone and consumes the data with their own Amazon Redshift instance.
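The truncated call above appears to be the tail of an Athena query against the subscription database. As a minimal sketch of that consumption pattern, assuming the AWS SDK for pandas (`awswrangler`) is the client in use, a consumer might read a subscribed table as follows. The table name `example_table`, the helper names `build_query` and `read_subscribed_table`, and the `reader` parameter are hypothetical and are not taken from the article; only the database name and `ctas_approach=False` come from the excerpt.

```python
from typing import Any, Callable, Optional


def build_query(table: str, limit: int = 10) -> str:
    """Build a small preview query. The table name is a hypothetical placeholder."""
    # Reject anything other than letters, digits, and underscores so the
    # table name can be safely interpolated into the SQL string.
    if not table.replace("_", "").isalnum():
        raise ValueError(f"unexpected table name: {table!r}")
    return f'SELECT * FROM "{table}" LIMIT {int(limit)}'


def read_subscribed_table(
    table: str,
    database: str,
    reader: Optional[Callable[..., Any]] = None,
    limit: int = 10,
) -> Any:
    """Query a table in the subscription database through Athena.

    `reader` is injectable (an assumption of this sketch) so the function
    can be exercised without AWS credentials. By default it falls back to
    awswrangler's Athena reader.
    """
    if reader is None:
        # Imported lazily so the module loads even without awswrangler installed.
        import awswrangler as wr

        reader = wr.athena.read_sql_query
    # ctas_approach=False reads the regular query result instead of
    # materializing a temporary CTAS table, matching the excerpt above.
    return reader(sql=build_query(table, limit), database=database, ctas_approach=False)
```

With valid AWS credentials and an approved subscription, calling `read_subscribed_table("example_table", "sagemakedatalakeenvironment_sub_db")` would return a pandas DataFrame. The exact query used in the original article is not recoverable from this excerpt.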
