Incremental refresh for Amazon Redshift materialized views on data lake tables

AWS Big Data

NOVEMBER 8, 2024

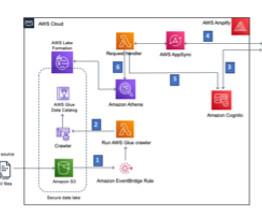

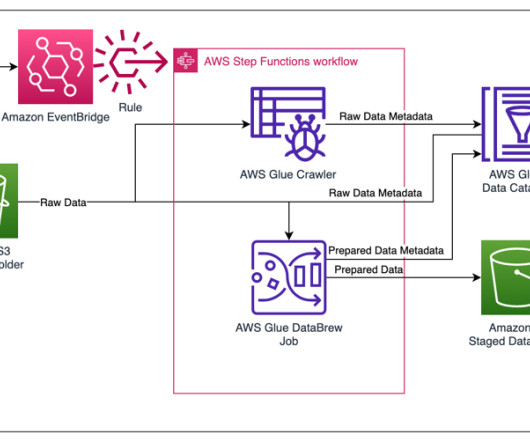

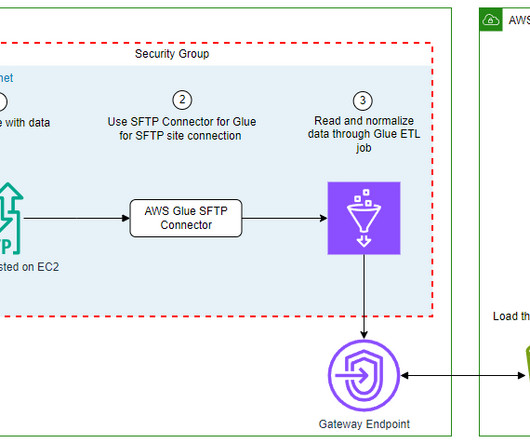

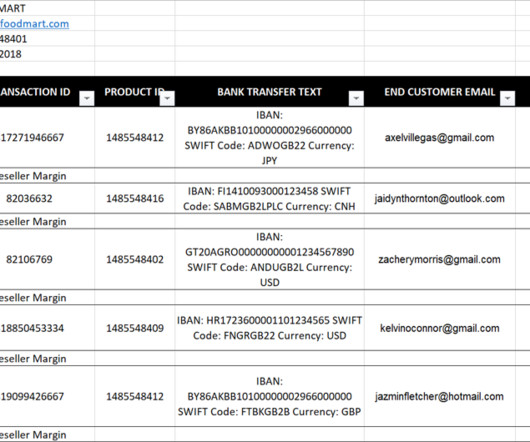

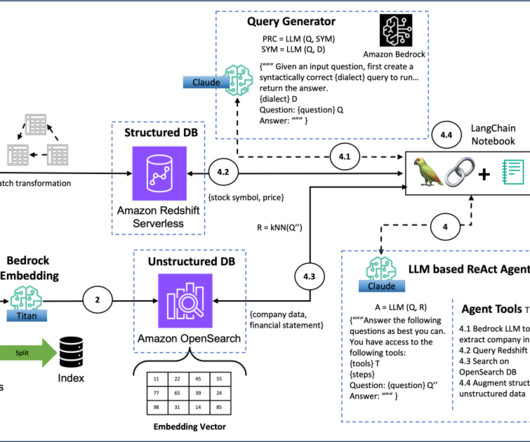

Amazon Redshift is a fast, fully managed cloud data warehouse that makes it cost-effective to analyze your data using standard SQL and business intelligence tools. Customers use data lake tables to achieve cost effective storage and interoperability with other tools. The sample files are ‘|’ delimited text files.

Let's personalize your content