Huawei unveils four strategic directions for the future of finance

CIO Business Intelligence

JUNE 19, 2023

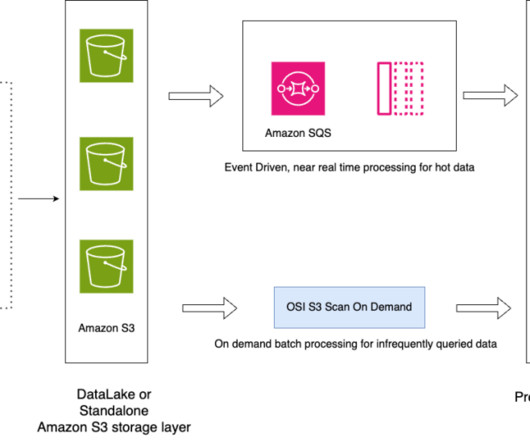

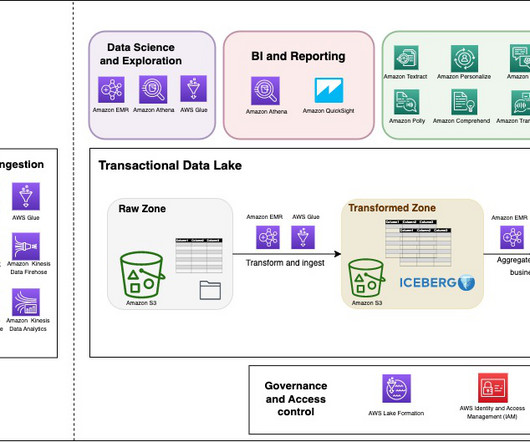

“We use the peer-to-peer computing of CPUs, GPUs, and NPUs to enable the multi-active data center to run efficiently, just like a computer,” Mr. Cao told the conference. The distributed architecture now outperforms the original core host platform, notably with lower latency.

Huawei’s new Data Intelligence Solution 3.0
