Data’s dark secret: Why poor quality cripples AI and growth

CIO Business Intelligence

APRIL 8, 2025

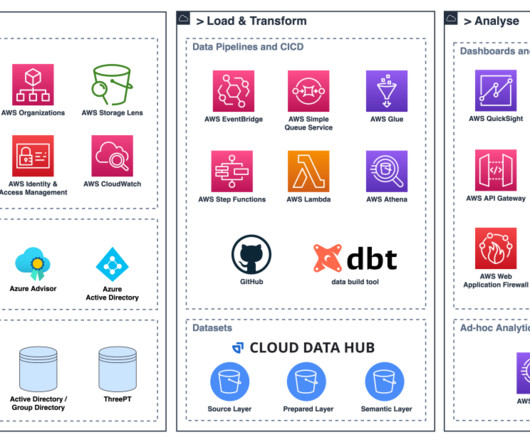

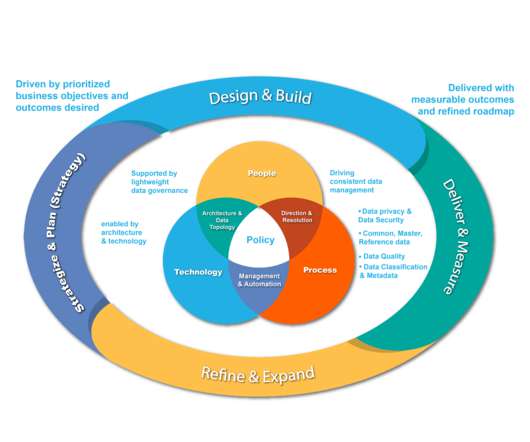

Data is the foundation of innovation, agility and competitive advantage in todays digital economy. As technology and business leaders, your strategic initiatives, from AI-powered decision-making to predictive insights and personalized experiences, are all fueled by data. Data quality is no longer a back-office concern.

Let's personalize your content