Improve Business Agility by Hiring a DataOps Engineer

DataKitchen

DECEMBER 20, 2020

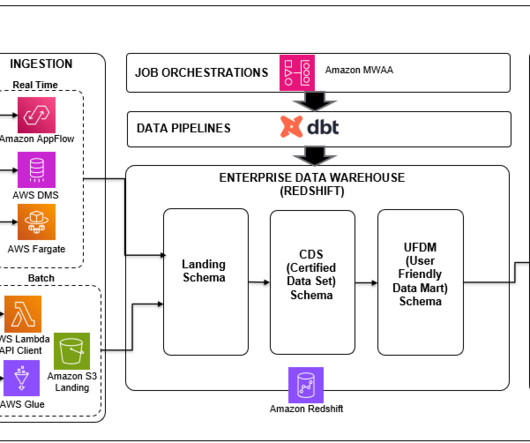

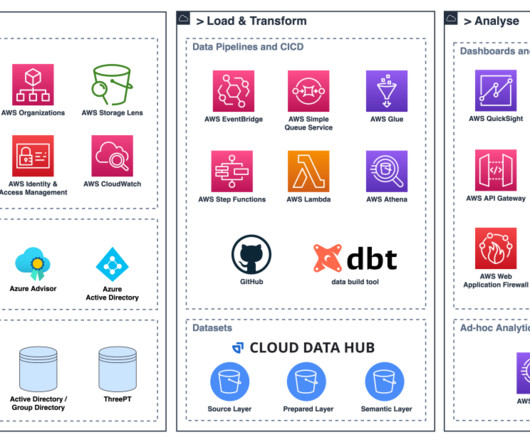

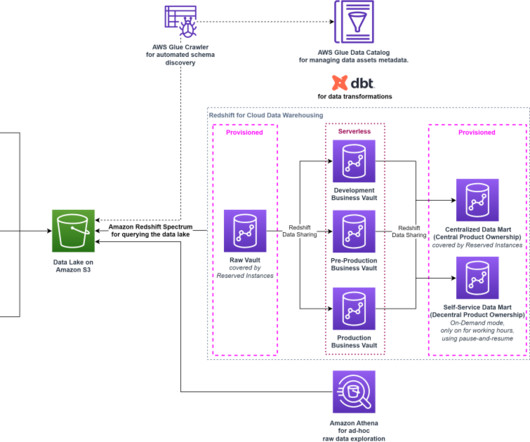

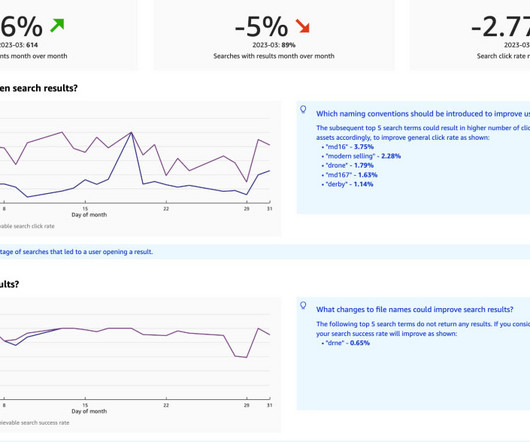

DataOps Engineers implement the continuous deployment of data analytics. They give data scientists tools to instantiate development sandboxes on demand. They automate the data operations pipeline and create platforms used to test and monitor data from ingestion to published charts and graphs.
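As a rough illustration of what "test and monitor data" can mean at a single pipeline stage, here is a minimal sketch of automated checks run on a batch right after ingestion. Everything in it is an assumption made for illustration: the function names, the `CheckResult` type, the sample rows, and the thresholds are hypothetical and do not come from DataKitchen's platform or any specific tool.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    """Outcome of one data test (hypothetical structure for this sketch)."""
    name: str
    passed: bool
    detail: str


def check_row_count(rows, minimum):
    """Pass when the batch holds at least `minimum` rows."""
    count = len(rows)
    return CheckResult(
        "row_count", count >= minimum,
        f"{count} rows, expected at least {minimum}",
    )


def check_no_missing(rows, column):
    """Pass when no row has a missing (None) value in `column`."""
    missing = sum(1 for row in rows if row.get(column) is None)
    return CheckResult(
        f"no_missing_{column}", missing == 0,
        f"{missing} rows missing '{column}'",
    )


def run_checks(rows, minimum_rows, required_columns):
    """Run every check and return all results so failures can be reported."""
    results = [check_row_count(rows, minimum_rows)]
    results += [check_no_missing(rows, col) for col in required_columns]
    return results


# Hypothetical ingested batch; the second row has a missing amount.
sample_rows = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 2, "amount": None},
]

results = run_checks(
    sample_rows, minimum_rows=2, required_columns=["order_id", "amount"]
)
failed = [r.name for r in results if not r.passed]

for r in results:
    print(f"{'PASS' if r.passed else 'FAIL'} {r.name}: {r.detail}")
```

In an automated pipeline, a non-empty `failed` list would typically stop the run or raise an alert before the data reaches published charts and graphs. The same pattern can be repeated at each later stage.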
