Migrate a petabyte-scale data warehouse from Actian Vectorwise to Amazon Redshift

AWS Big Data

MAY 30, 2024

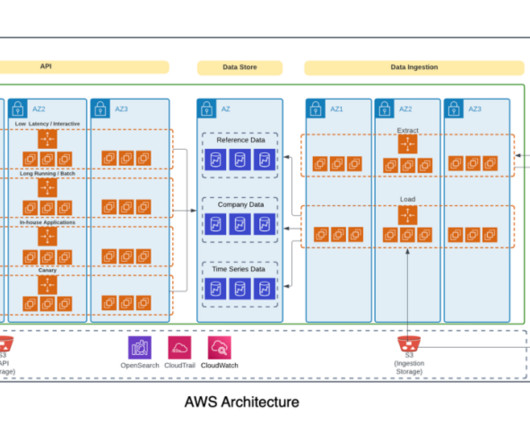

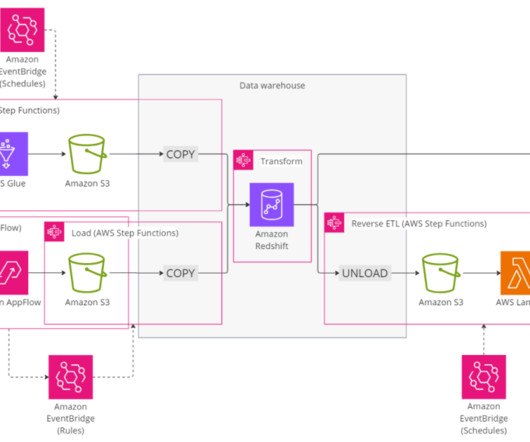

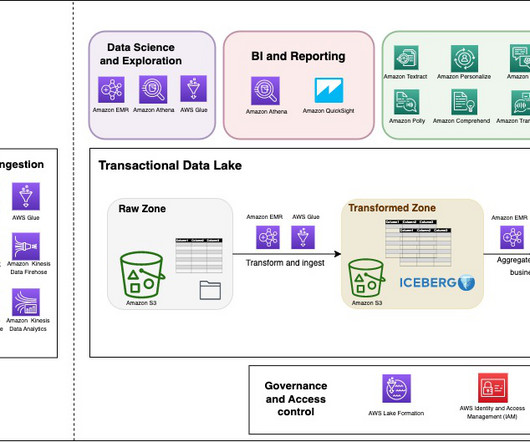

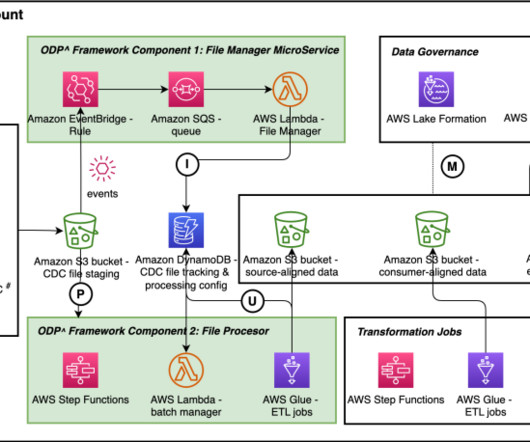

Amazon Redshift is a fast, scalable, and fully managed cloud data warehouse that allows you to process and run your complex SQL analytics workloads on structured and semi-structured data. Data ingestion – Pentaho was used to ingest data sourced from multiple data publishers into the data store.

Let's personalize your content