Manage concurrent write conflicts in Apache Iceberg on the AWS Glue Data Catalog

AWS Big Data

APRIL 8, 2025

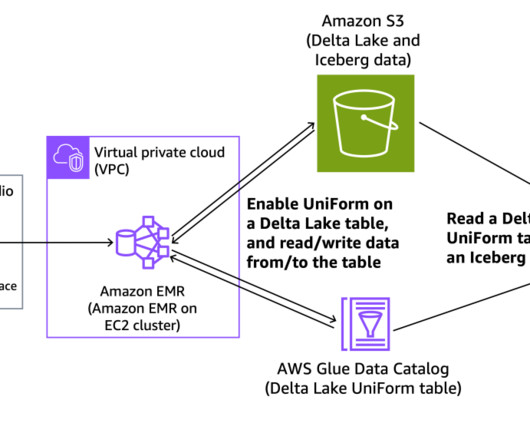

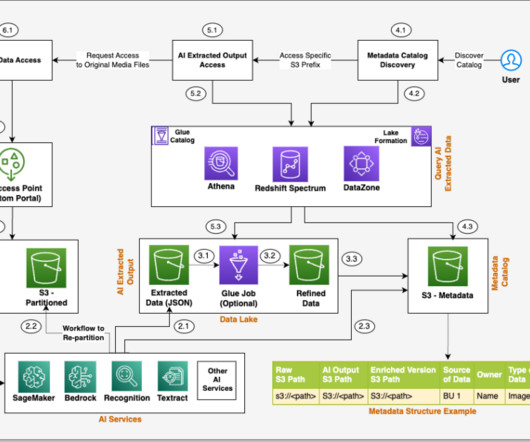

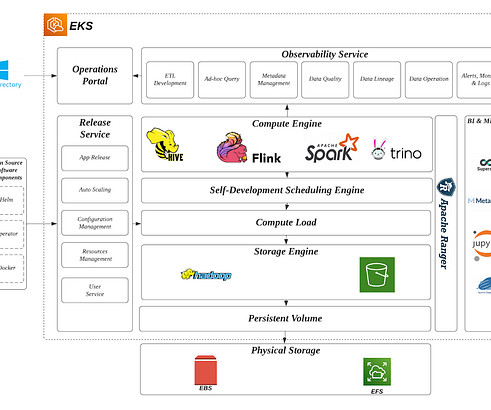

In modern data architectures, Apache Iceberg has emerged as a popular table format for data lakes, offering key features including ACID transactions and concurrent write support. However, commits can still fail if the latest metadata is updated after the base metadata version is established.

Let's personalize your content