Mastering Multi-Cloud with Cloudera: Strategic Data & AI Deployments Across Clouds

Cloudera

JANUARY 7, 2025

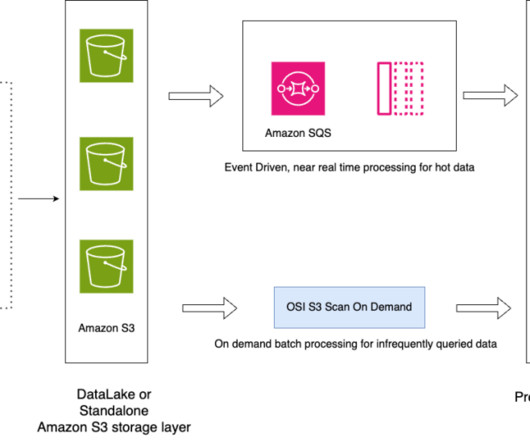

Adopting hybrid and multi-cloud models provides enterprises with flexibility, cost optimization, and a way to avoid vendor lock-in. A prominent public health organization integrated data from multiple regional health entities within a hybrid multi-cloud environment (AWS, Azure, and on-premises).

Why Hybrid and Multi-Cloud?
