When is data too clean to be useful for enterprise AI?

CIO Business Intelligence

NOVEMBER 27, 2024

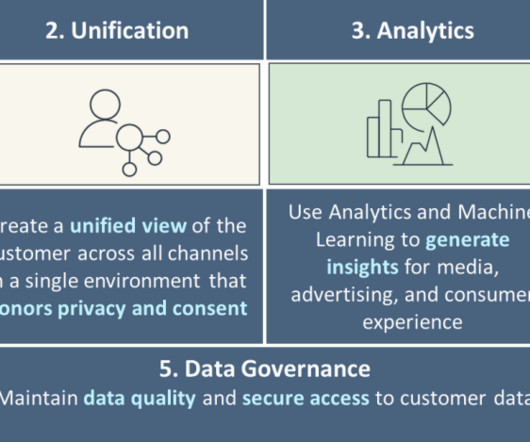

Once the province of the data warehouse team, data management has increasingly become a C-suite priority, with data quality seen as key to both customer experience and business performance. But along with siloed data and compliance concerns, poor data quality is holding back enterprise AI projects.

Let's personalize your content