Author visual ETL flows on Amazon SageMaker Unified Studio (preview)

AWS Big Data

DECEMBER 4, 2024

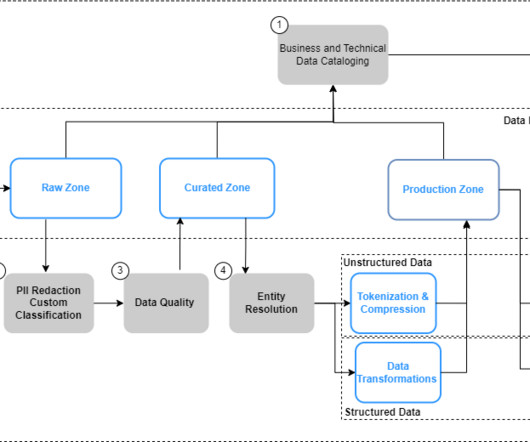

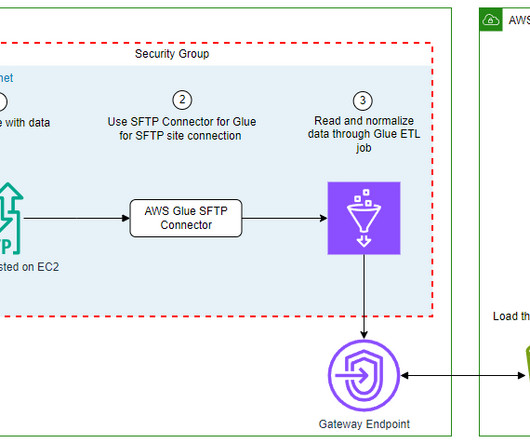

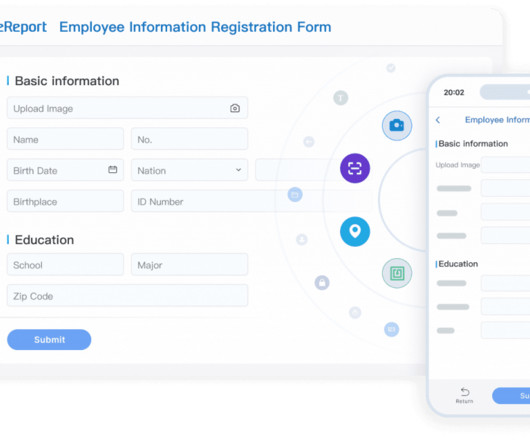

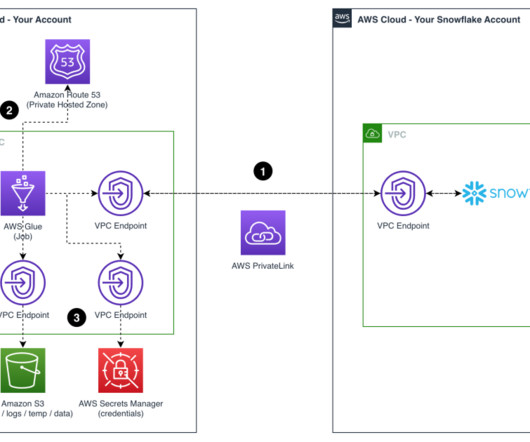

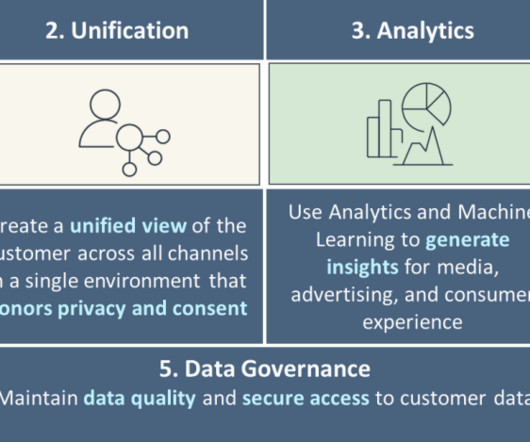

Amazon SageMaker Unified Studio (preview) includes visual ETL, a new visual interface that makes it simple for data engineers to author, run, and monitor extract, transform, load (ETL) data integration flows. You can use it to compose flows that move and transform data, then run them on serverless compute.
