From data lakes to insights: dbt adapter for Amazon Athena now supported in dbt Cloud

AWS Big Data

NOVEMBER 22, 2024

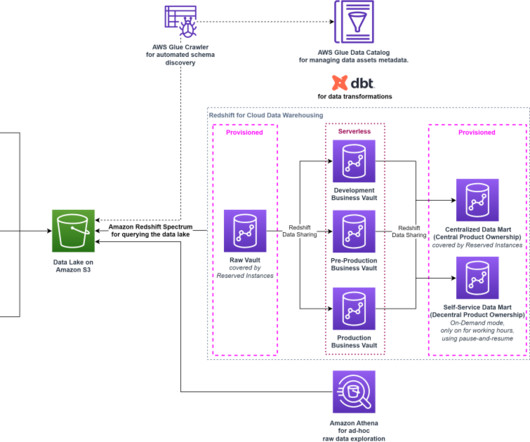

The need for streamlined data transformations As organizations increasingly adopt cloud-based data lakes and warehouses, the demand for efficient data transformation tools has grown. With dbt, teams can define data quality checks and access controls as part of their transformation workflow.

Let's personalize your content