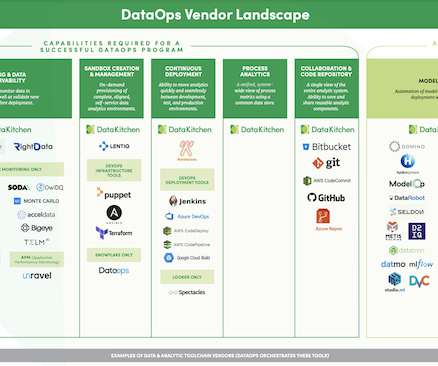

DataOps: Managing the Process and Technology

David Menninger's Analyst Perspectives

OCTOBER 7, 2020

Organizations needed to make sure those processes completed successfully and reliably, so that they had the data necessary to make informed business decisions. The result was battle-tested integrations that could hold up over time.
