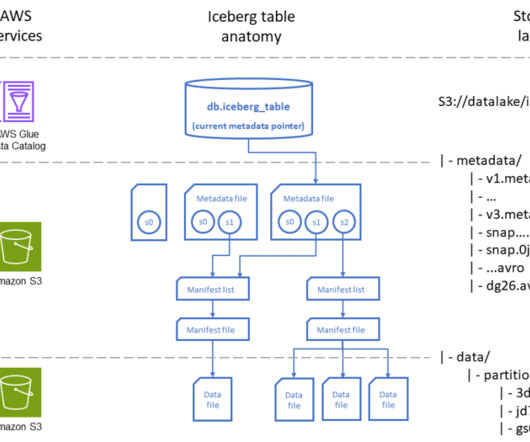

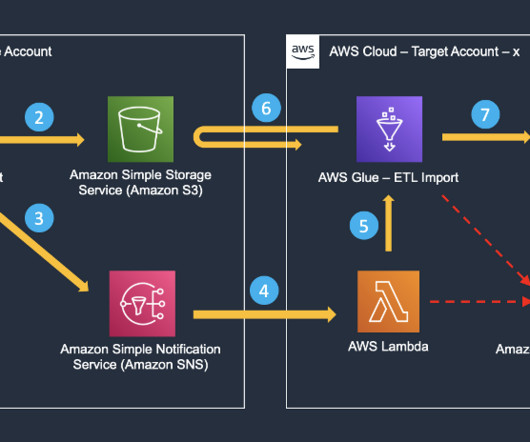

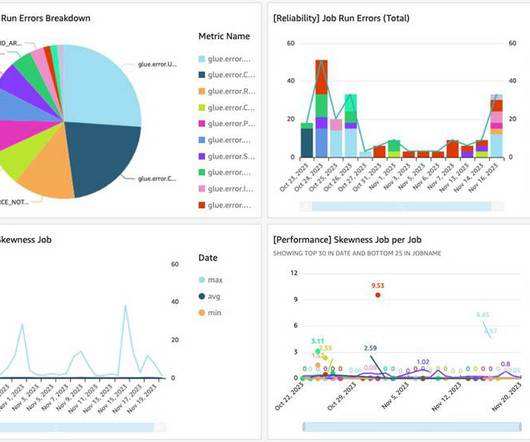

Monitoring Apache Iceberg metadata layer using AWS Lambda, AWS Glue, and AWS CloudWatch

AWS Big Data

JULY 29, 2024

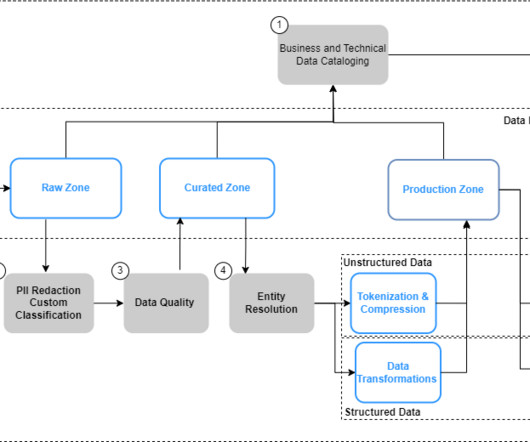

In the era of big data, data lakes have emerged as a cornerstone for storing vast amounts of raw data in its native format. They support structured, semi-structured, and unstructured data, offering a flexible and scalable environment for data ingestion from multiple sources.

Let's personalize your content