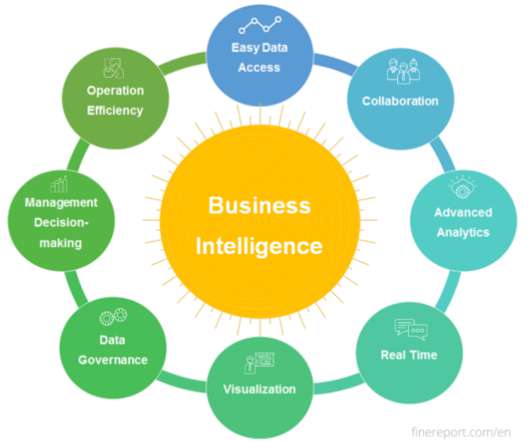

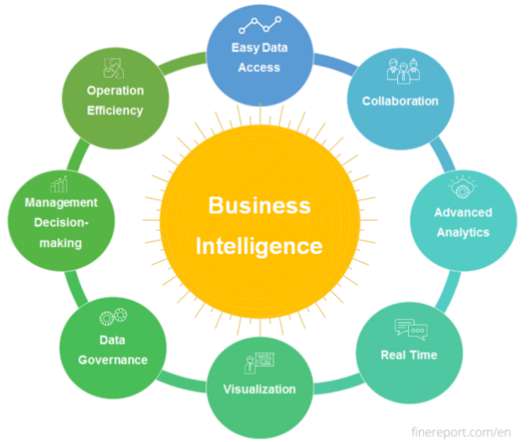

What is data analytics? Analyzing and managing data for decisions

CIO Business Intelligence

JUNE 7, 2022

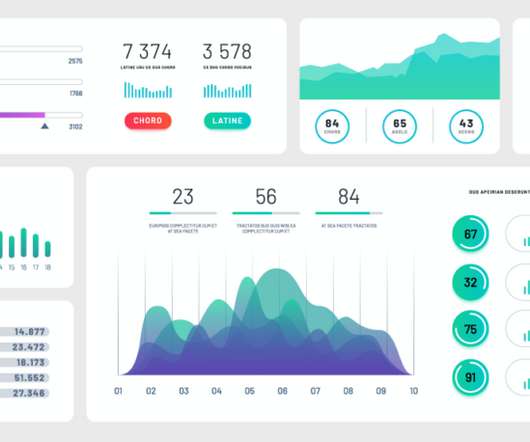

Data analytics comprises the processes, tools, and techniques of data analysis and management, including the collection, organization, and storage of data. Its chief aim is to apply statistical analysis and technology to data to find trends and solve problems.

What are the four types of data analytics?
