Amazon Q data integration adds DataFrame support and in-prompt context-aware job creation

AWS Big Data

DECEMBER 20, 2024

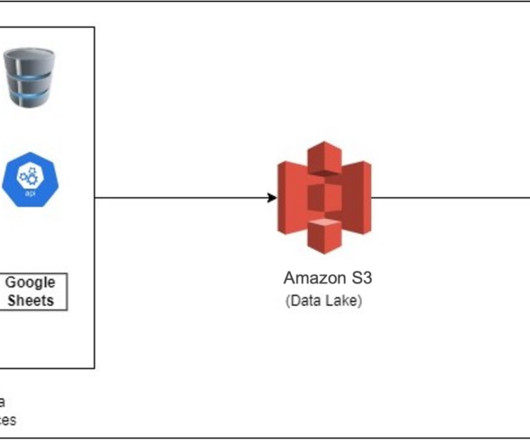

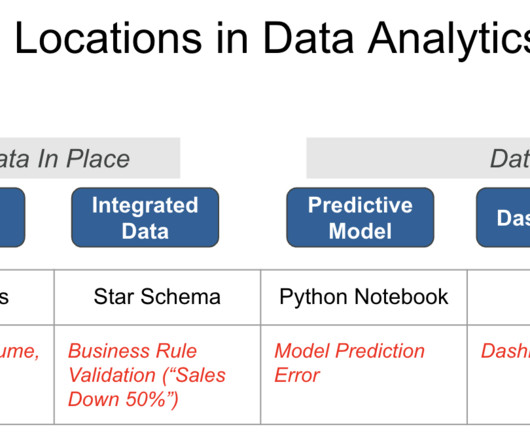

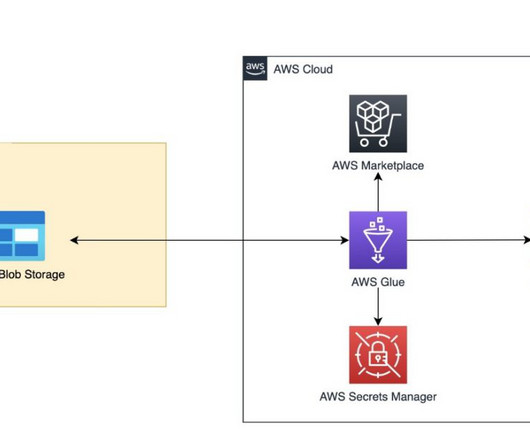

Amazon Q data integration , introduced in January 2024, allows you to use natural language to author extract, transform, load (ETL) jobs and operations in AWS Glue specific data abstraction DynamicFrame. In this post, we discuss how Amazon Q data integration transforms ETL workflow development.

Let's personalize your content