Data Integration: Strategies for Efficient ETL Processes

Analytics Vidhya

JUNE 3, 2024

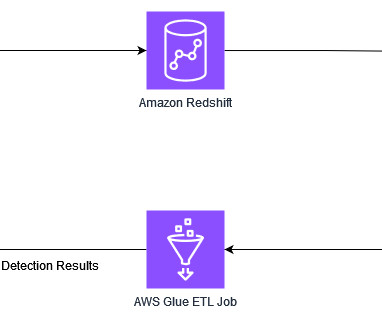

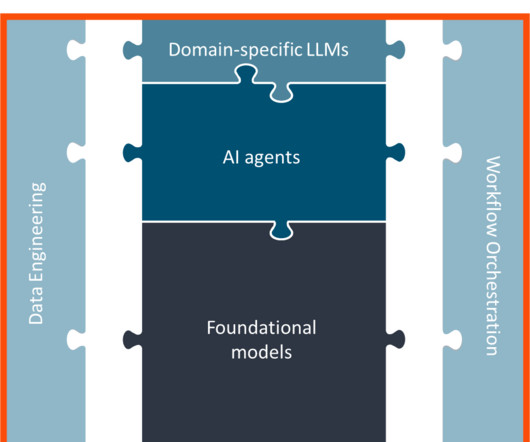

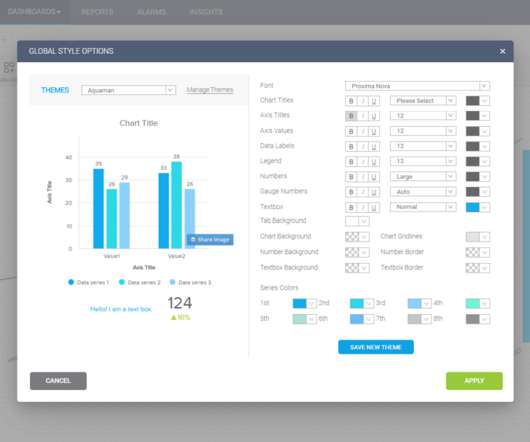

Introduction

In today’s data-driven landscape, businesses must integrate data from various sources to derive actionable insights and make informed decisions. With data volumes growing at an […]

The post Data Integration: Strategies for Efficient ETL Processes appeared first on Analytics Vidhya.
