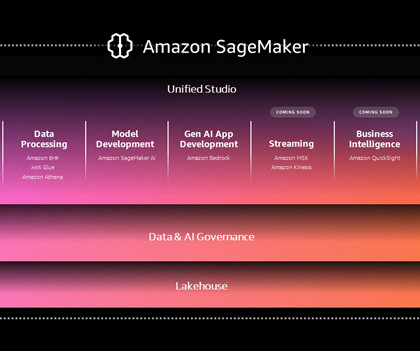

Simplify data integration with AWS Glue and zero-ETL to Amazon SageMaker Lakehouse

AWS Big Data

DECEMBER 4, 2024

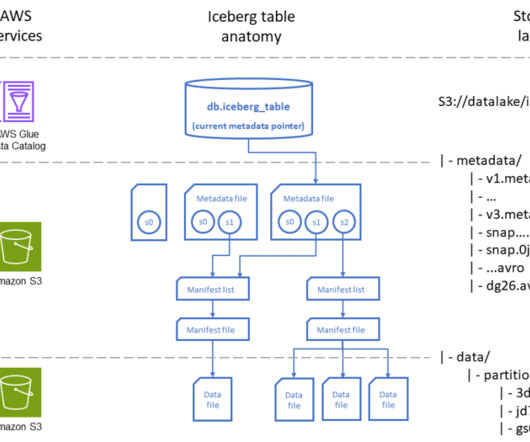

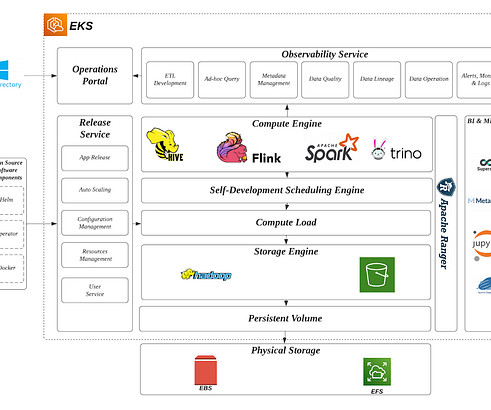

As organizations place growing emphasis on data, they are constantly seeking more efficient and agile ways to integrate it, especially when it comes from a wide variety of applications. When data is integrated directly with Amazon SageMaker Lakehouse, it is automatically cataloged and can be secured through fine-grained permissions in AWS Lake Formation.
