Monitoring Apache Iceberg metadata layer using AWS Lambda, AWS Glue, and AWS CloudWatch

AWS Big Data

JULY 29, 2024

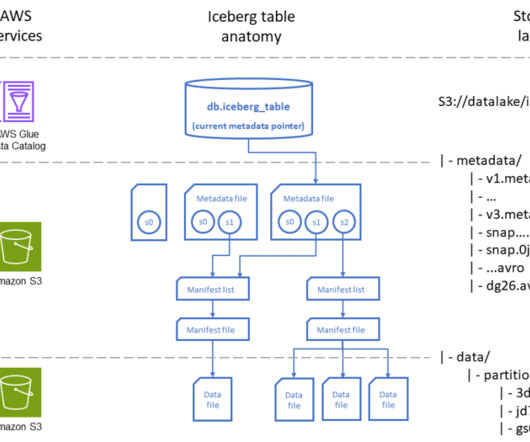

It addresses many of the shortcomings of traditional data lakes by providing features such as ACID transactions, schema evolution, row-level updates and deletes, and time travel. In this blog post, we’ll discuss how the metadata layer of Apache Iceberg can be used to make data lakes more efficient.

Let's personalize your content