Modernize your legacy databases with AWS data lakes, Part 2: Build a data lake using AWS DMS data on Apache Iceberg

AWS Big Data

OCTOBER 30, 2024

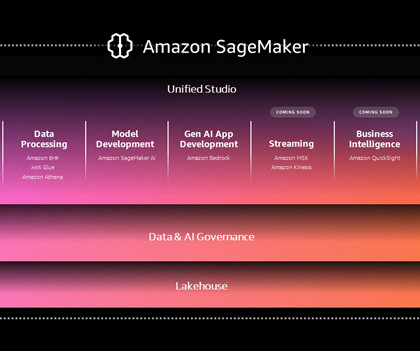

This is part two of a three-part series where we show how to build a data lake on AWS using a modern data architecture. This post shows how to load data from a legacy database (SQL Server) into a transactional data lake ( Apache Iceberg ) using AWS Glue. Delete the bucket.

Let's personalize your content