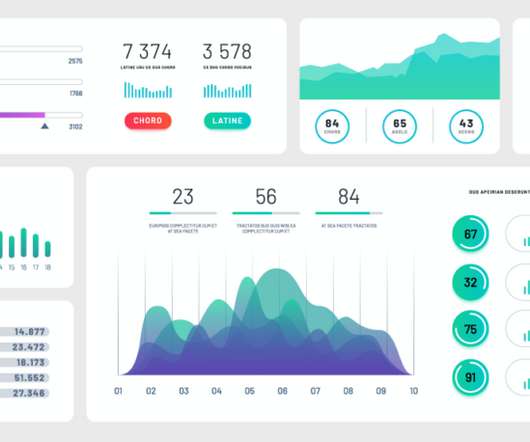

Data Warehouses, Data Marts and Data Lakes

Analytics Vidhya

JANUARY 7, 2022

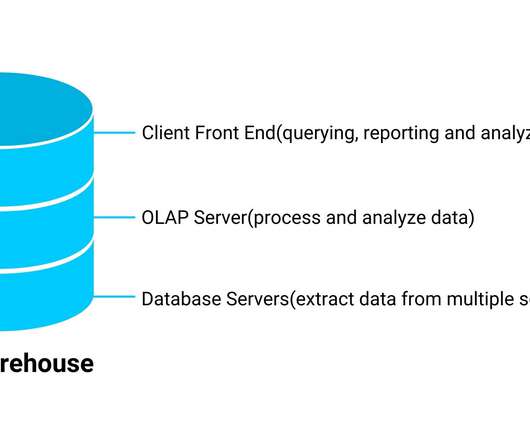

Introduction

All data repositories share a similar purpose: to ingest data for reporting, analysis, and delivering insights. However, they differ in the types of data they store and in how that data is made accessible to users.
