Orca Security’s journey to a petabyte-scale data lake with Apache Iceberg and AWS Analytics

AWS Big Data

JULY 20, 2023

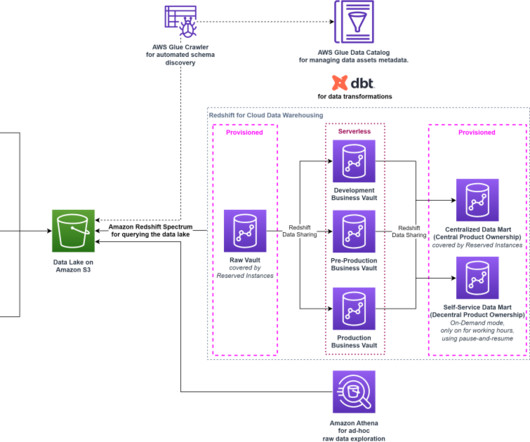

With data becoming the driving force behind many industries today, having a modern data architecture is pivotal for organizations to be successful. In this post, we describe Orca’s journey building a transactional data lake using Amazon Simple Storage Service (Amazon S3), Apache Iceberg, and AWS Analytics.

Let's personalize your content