From data lakes to insights: dbt adapter for Amazon Athena now supported in dbt Cloud

AWS Big Data

NOVEMBER 22, 2024

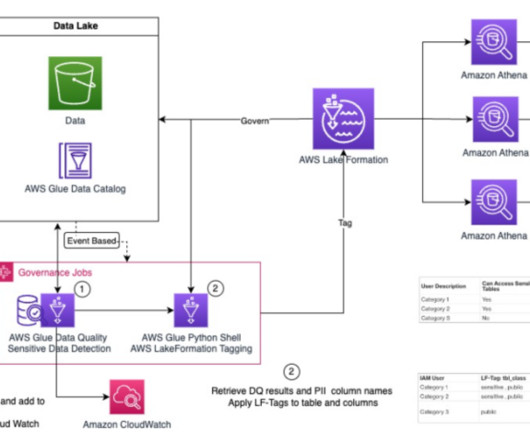

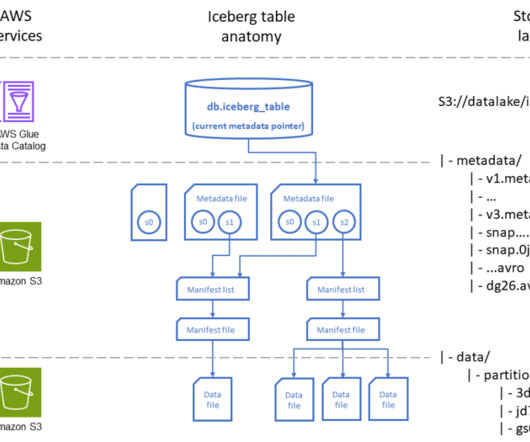

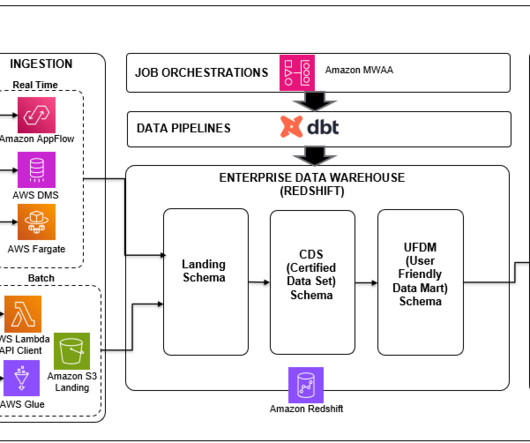

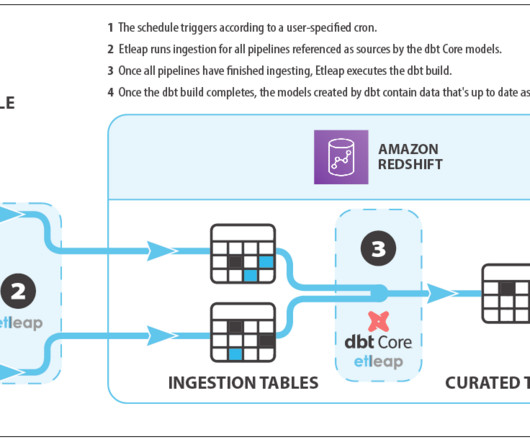

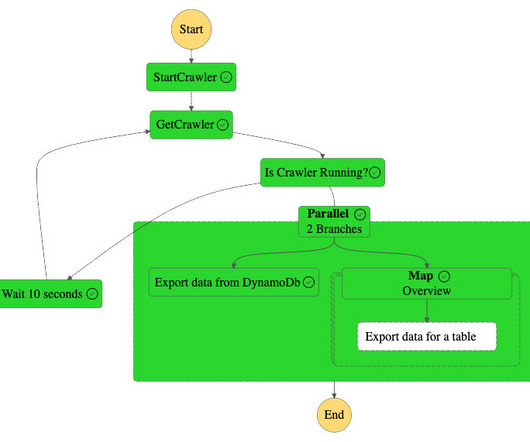

The need for streamlined data transformations As organizations increasingly adopt cloud-based data lakes and warehouses, the demand for efficient data transformation tools has grown. Using Athena and the dbt adapter, you can transform raw data in Amazon S3 into well-structured tables suitable for analytics.

Let's personalize your content