Expanding data analysis and visualization options: Amazon DataZone now integrates with Tableau, Power BI, and more

AWS Big Data

OCTOBER 30, 2024

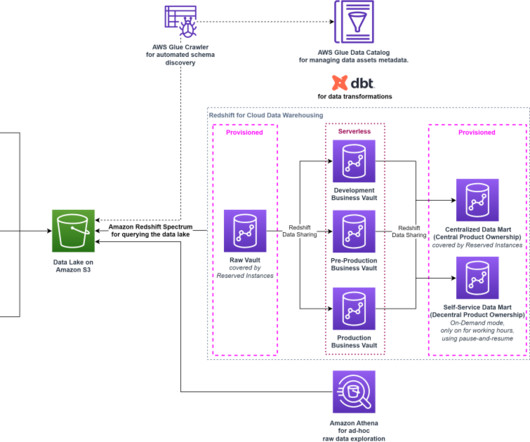

Amazon DataZone now launched authentication supports through the Amazon Athena JDBC driver, allowing data users to seamlessly query their subscribed data lake assets via popular business intelligence (BI) and analytics tools like Tableau, Power BI, Excel, SQL Workbench, DBeaver, and more.

Let's personalize your content