Run Apache XTable in AWS Lambda for background conversion of open table formats

AWS Big Data

NOVEMBER 26, 2024

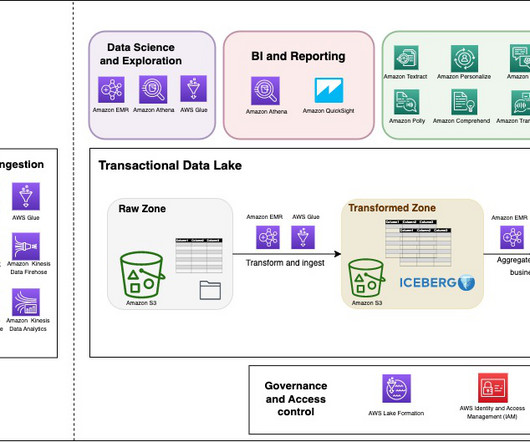

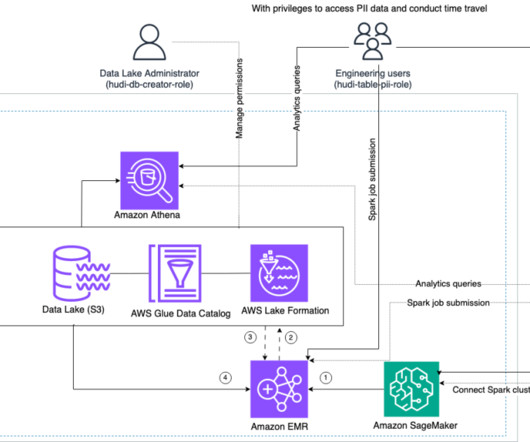

Initially, data warehouses were the go-to solution for structured data and analytical workloads but were limited by proprietary storage formats and their inability to handle unstructured data. Eventually, transactional data lakes emerged to add transactional consistency and performance of a data warehouse to the data lake.

Let's personalize your content