Complexity Drives Costs: A Look Inside BYOD and Azure Data Lakes

Jet Global

NOVEMBER 5, 2020

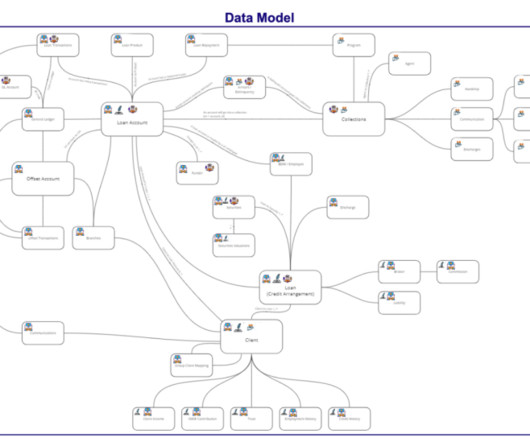

If your company is using Microsoft Dynamics AX, you’ll be aware of Microsoft’s shift to Microsoft Dynamics 365 Finance and Supply Chain Management (D365 F&SCM).

Option 3: Azure Data Lakes

This leads us to Microsoft’s apparent long-term strategy for D365 F&SCM reporting: Azure Data Lakes.
