Create an end-to-end data strategy for Customer 360 on AWS

AWS Big Data

MARCH 26, 2024

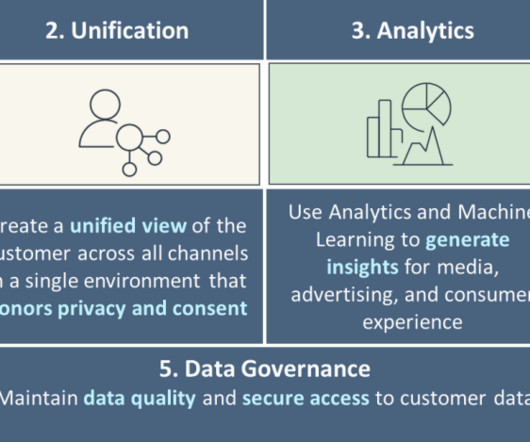

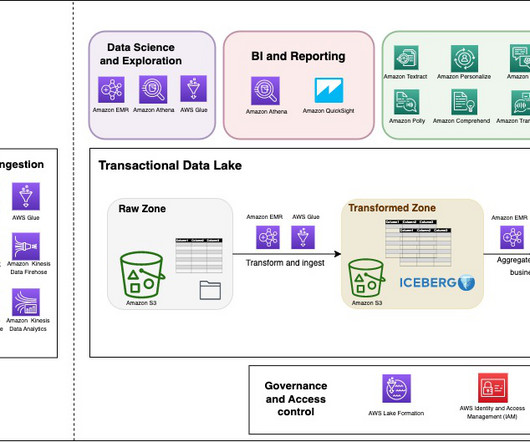

A Gartner Marketing survey found that only 14% of organizations have successfully implemented a Customer 360 (C360) solution. The survey attributes this low rate to a lack of consensus on what a 360-degree view means, challenges with data quality, and the absence of a cross-functional governance structure for customer data. Then, you transform this data into a concise format.
