How EUROGATE established a data mesh architecture using Amazon DataZone

AWS Big Data

JANUARY 15, 2025

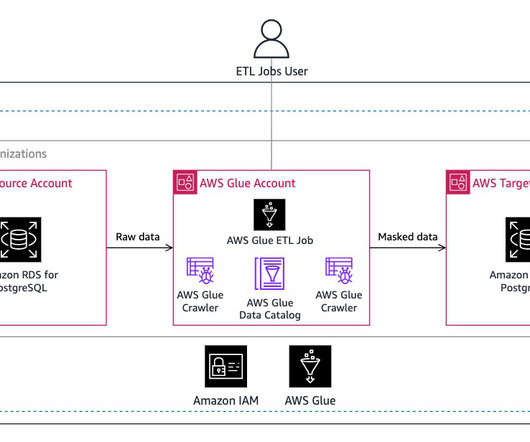

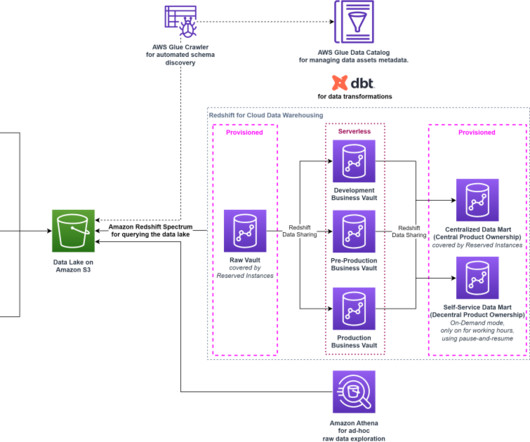

An extract, transform, and load (ETL) process using AWS Glue is triggered once a day to extract the required data and transform it into the required format and quality, following the data product principle of data mesh architectures. This process is shown in the following figure.
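As a rough illustration of the once-a-day trigger, the following minimal sketch shows one way a scheduled AWS Glue trigger could be defined with boto3. It is not EUROGATE's actual configuration: the job name, the trigger naming scheme, and the 02:00 UTC start time are hypothetical, since the post only states that the job runs daily. The parameters are built in a separate function so they can be inspected without AWS credentials.

```python
from typing import Any


def build_daily_trigger(job_name: str, hour_utc: int = 2) -> dict[str, Any]:
    """Build keyword arguments for the Glue CreateTrigger API call.

    job_name and hour_utc are illustrative assumptions, not values from the post.
    """
    if not 0 <= hour_utc <= 23:
        raise ValueError("hour_utc must be between 0 and 23")
    return {
        "Name": f"{job_name}-daily",  # hypothetical naming scheme
        "Type": "SCHEDULED",
        # Glue schedules use six-field cron expressions evaluated in UTC:
        # minutes, hours, day-of-month, month, day-of-week, year.
        "Schedule": f"cron(0 {hour_utc} * * ? *)",
        "Actions": [{"JobName": job_name}],
        "StartOnCreation": True,
    }


def create_daily_trigger(job_name: str, hour_utc: int = 2) -> None:
    """Register the trigger; needs AWS credentials and an existing Glue job."""
    import boto3  # imported lazily so the builder works without boto3 installed

    glue = boto3.client("glue")
    glue.create_trigger(**build_daily_trigger(job_name, hour_utc))


if __name__ == "__main__":
    # Print the request parameters only; no AWS call is made here.
    print(build_daily_trigger("hypothetical-data-product-etl"))
```

Keeping the schedule in a Glue trigger, rather than in an external scheduler, is only one option; the same daily cadence could also be driven by a workflow or an event rule.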
