10 Examples of How Big Data in Logistics Can Transform The Supply Chain

datapine

MAY 2, 2023

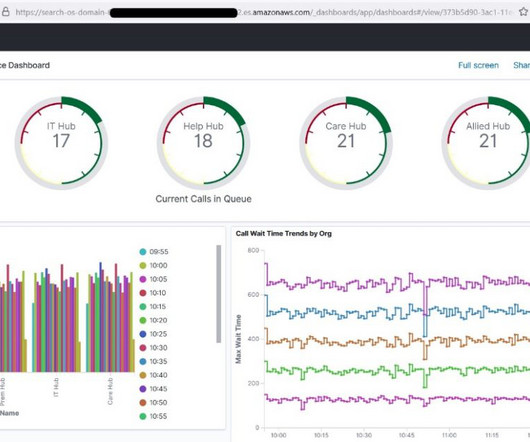

In addition to driving operational efficiency and consistently meeting fulfillment targets, logistics providers use big data applications to give customers real-time updates and a range of flexible pick-up, drop-off, and ordering options. Try our 14-day free trial today and transform your supply chain!
