SAP Datasphere Powers Business at the Speed of Data

Rocket-Powered Data Science

MARCH 20, 2023

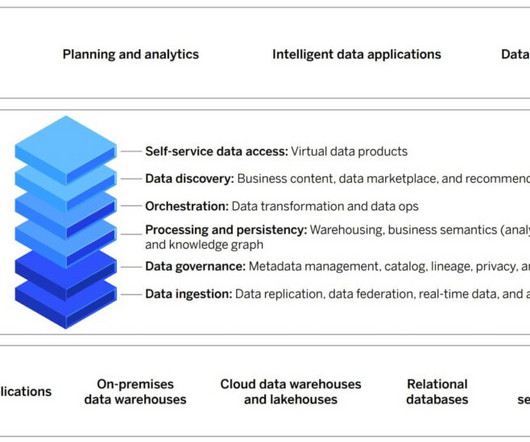

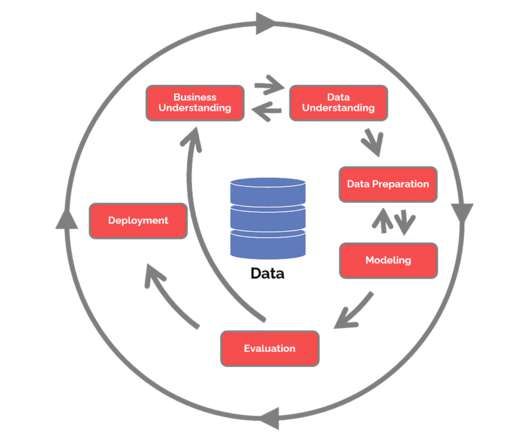

Data collections are the ones and zeroes that encode the actionable insights (patterns, trends, relationships) that we seek to extract from our data through machine learning and data science. Datasphere is a data discovery tool with essential functionalities: recommendations, data marketplace, and business content (i.e.,
