Talend Data Fabric Simplifies Data Life Cycle Management

David Menninger's Analyst Perspectives

NOVEMBER 16, 2021

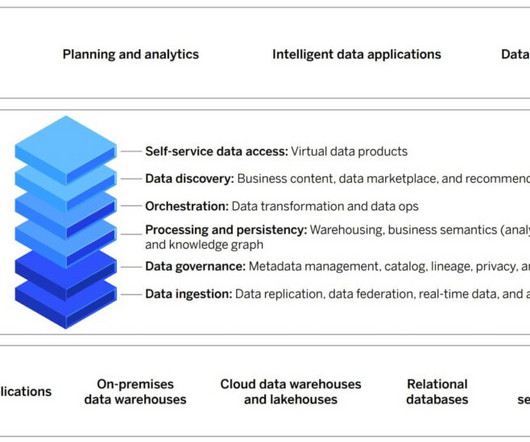

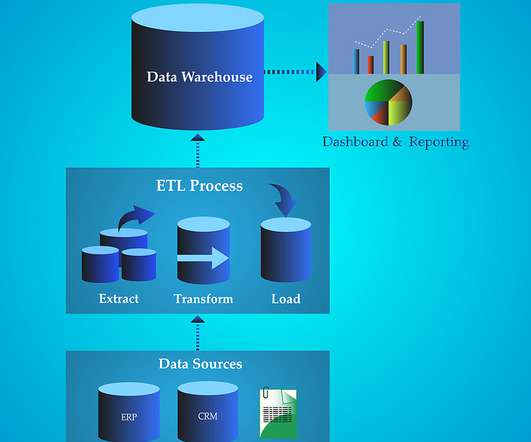

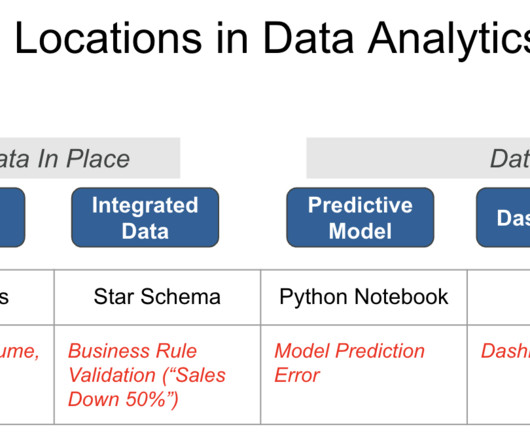

Talend is a data integration and management software company that offers applications for cloud computing, big data integration, application integration, data quality and master data management. Its code generation architecture uses a visual interface to create Java or SQL code.

Let's personalize your content