Announcing Open Source DataOps Data Quality TestGen 3.0

DataKitchen

FEBRUARY 20, 2025

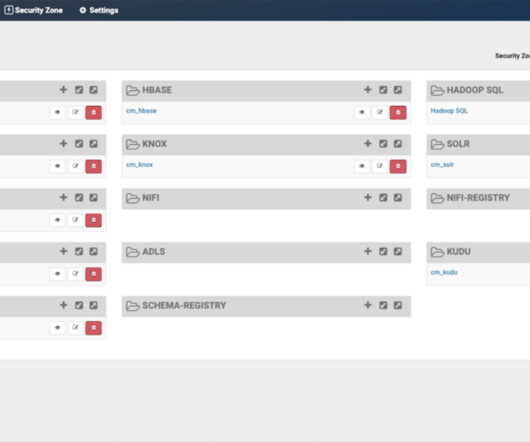

Announcing DataOps Data Quality TestGen 3.0: open-source, generative data quality software. You don’t have to imagine it; you can start using it today: [link]. Version 3.0 introduces Data Quality Scoring. DataOps just got more intelligent.
