Unbundling the Graph in GraphRAG

O'Reilly on Data

NOVEMBER 19, 2024

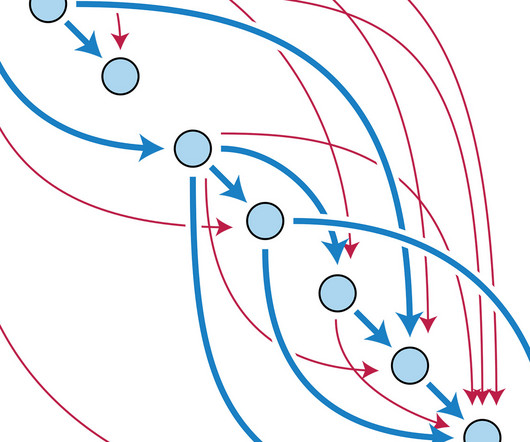

decomposes a complex task into a graph of subtasks, then uses LLMs to answer the subtasks while optimizing for costs across the graph.

A generalized, unbundled workflow

A more accountable approach to GraphRAG is to unbundle the process of knowledge graph construction, paying special attention to data quality.
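To make the subtask-graph idea mentioned earlier in this section concrete, here is a minimal sketch in Python. It is not the method of any particular paper or library: the subtask names, the two model tiers and their costs, the `choose_model` heuristic, and the `fake_llm` stand-in are all hypothetical, chosen only to show a task decomposed into a dependency graph, answered in dependency order, with a running cost total.

```python
from graphlib import TopologicalSorter

# Hypothetical subtask graph: each key depends on the subtasks in its set.
SUBTASKS = {
    "extract_entities": set(),
    "extract_relations": {"extract_entities"},
    "summarize": {"extract_entities", "extract_relations"},
}

# Hypothetical per-call costs for two stand-in model tiers.
MODEL_COST = {"small": 1, "large": 10}


def choose_model(num_dependencies: int) -> str:
    """Toy cost heuristic: use the large tier only when merging several inputs."""
    return "large" if num_dependencies >= 2 else "small"


def fake_llm(model: str, task: str, context: list[str]) -> str:
    """Stand-in for a real LLM call; returns a deterministic string."""
    return f"{task}[{model}]({', '.join(context)})"


def run_graph(subtasks: dict[str, set[str]]) -> tuple[dict[str, str], int]:
    """Answer every subtask after its dependencies and total the cost."""
    answers: dict[str, str] = {}
    total_cost = 0
    # static_order() yields each node only after all of its predecessors.
    for task in TopologicalSorter(subtasks).static_order():
        deps = sorted(subtasks[task])
        model = choose_model(len(deps))
        answers[task] = fake_llm(model, task, [answers[d] for d in deps])
        total_cost += MODEL_COST[model]
    return answers, total_cost


answers, total_cost = run_graph(SUBTASKS)
```

In a real system the heuristic would likely weigh answer quality against cost per subtask rather than counting dependencies; the point here is only that the graph structure makes both the execution order and the cost accounting explicit.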
