Managing machine learning in the enterprise: Lessons from banking and health care

O'Reilly on Data

JULY 15, 2019

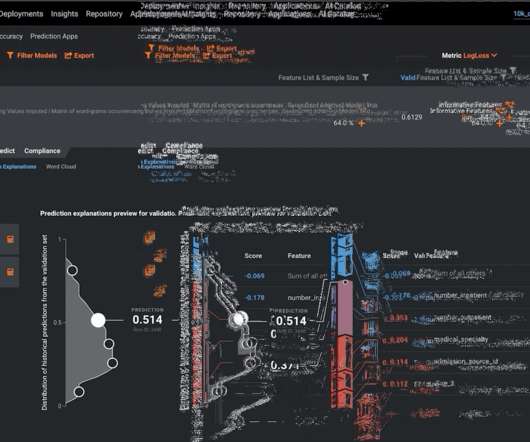

After the 2008 financial crisis, the Federal Reserve issued a new set of guidelines governing models: SR 11-7, Guidance on Model Risk Management. Note that the emphasis of SR 11-7 is on risk management. Topics: sources of model risk; model risk management; AI projects in financial services and health care.
