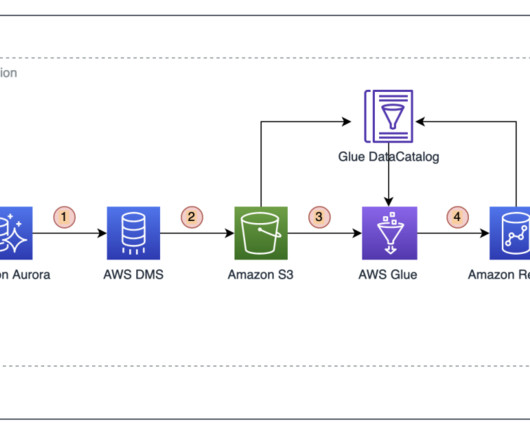

How ANZ Institutional Division built a federated data platform to enable their domain teams to build data products to support business outcomes

AWS Big Data

DECEMBER 4, 2024

This post explores how the shift to a data product mindset is being implemented, the challenges faced, and the early wins that are shaping the future of data management in the Institutional Division.

About the Authors

Leo Ramsamy is a Platform Architect specializing in data and analytics for ANZ’s Institutional Division.
